Hey Siri, why are humans reviewing our voice files?

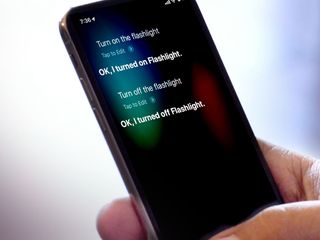

Digital assistants like Alexa, Google, and Siri use humans to help train them. This has been going on since the beginning but it's only hit the mainstream media and consciousness this year. Siri, in particular, has caused controversy because, for the last few years, Apple has been pushing privacy as a top-down, front-line feature.

So, what's going on?

Matt Day, Giles Turner, and Natalia Drozdiak, writing for Bloomberg on April 10th, 2019:

Amazon Workers Are Listening to What You Tell Alexa: A global team reviews audio clips in an effort to help the voice-activated assistant respond to commands.

Obviously, the report focused primarily on Amazon:

Amazon.com Inc. employs thousands of people around the world to help improve the Alexa digital assistant powering its line of Echo speakers. The team listens to voice recordings captured in Echo owners' homes and offices. The recordings are transcribed, annotated and then fed back into the software as part of an effort to eliminate gaps in Alexa's understanding of human speech and help it better respond to commands.

But the reporters did their jobs, asked the obvious follow-up question (what about other virtual assistants?) and answered that as well:

Apple's Siri also has human helpers, who work to gauge whether the digital assistant's interpretation of requests lines up with what the person said. The recordings they review lack personally identifiable information and are stored for six months tied to a random identifier, according to an Apple security white paper. After that, the data is stripped of its random identification information but may be stored for longer periods to improve Siri's voice recognition.

At Google, some reviewers can access some audio snippets from its Assistant to help train and improve the product, but it's not associated with any personally identifiable information and the audio is distorted, the company says.

Lente Van Hee, Ruben Van Den Heuvel, Tim Verheyden, Denny Baert, writing for VRT NWS on July 10:

Google employees are eavesdropping, even in your living room, VRT NWS has discovered

Putting the name of your publication in the title — and in almost every graf — is tight!

Not everyone is aware of the fact that everything you say to your Google smart speakers and your Google Assistant is being recorded and stored. But that is clearly stated in Google's terms and conditions. And what people are certainly not aware of, simply because Google doesn't mention it in its terms and conditions, is that Google employees can listen to excerpts from those recordings.

But they do offer this as well:

Most recordings made via Google Home smart speakers are very clear. Recordings made with Google Assistant, the smartphone app, are of telephone quality. But the sound is not distorted in any way.

Then, just this weekend, Alex Hern, writing for The Guardian:

Apple contractors 'regularly hear confidential details' on Siri recordings.

Although Apple does not explicitly disclose it in its consumer-facing privacy documentation, a small proportion of Siri recordings are passed on to contractors working for the company around the world. They are tasked with grading the responses on a variety of factors, including whether the activation of the voice assistant was deliberate or accidental, whether the query was something Siri could be expected to help with and whether Siri's response was appropriate.

Also:

Apple differs from those companies in some ways, however. For one, Amazon and Google allow users to opt out of some uses of their recordings; Apple offers no similar choice short of disabling Siri entirely.

Although that's been disputed: There is an iCloud Analytics toggle in the privacy settings that says it includes Siri analytics. But none of these things are crystal clear and that's largely the problem.

Here's how Amazon responded to Bloomberg:

"We take the security and privacy of our customers' personal information seriously. We only annotate an extremely small sample of Alexa voice recordings in order [to] improve the customer experience. For example, this information helps us train our speech recognition and natural language understanding systems, so Alexa can better understand your requests, and ensure the service works well for everyone.

"We have strict technical and operational safeguards, and have a zero tolerance policy for the abuse of our system. Employees do not have direct access to information that can identify the person or account as part of this workflow. All information is treated with high confidentiality and we use multi-factor authentication to restrict access, service encryption and audits of our control environment to protect it."

And Google to VRT NWS:

"This happens by making transcripts of a small number of audio files", Google's spokesman for Belgium says. He adds that "this work is of crucial importance to develop technologies sustaining products such as the Google Assistant." Google states that their language experts only judge "about 0.2 percent of all audio fragments". These are not linked to any personal or identifiable information, the company adds.

And Apple to The Guardian:

"A small portion of Siri requests are analysed to improve Siri and dictation. User requests are not associated with the user's Apple ID. Siri responses are analysed in secure facilities and all reviewers are under the obligation to adhere to Apple's strict confidentiality requirements." The company added that a very small random subset, less than 1% of daily Siri activations, are used for grading, and those used are typically only a few seconds long.

Even though Bloomberg reported on it all back in April, and I'm fairly sure it's been talked about off and on for over a decade, the VRT NWS and now The Guardian pieces really caught fire. Especially the latter.

Maybe because, unlike the others, it front-loaded the part about sex, crime, and business. Or maybe just because it came out after Apple started putting big privacy billboards up in Las Vegas, Toronto, and Hamburg.

So, while some say Apple is being held to a higher or different standard here, it's not by anyone other than Apple themselves.

At issue is whether Amazon, Google, and Apple properly disclose the process (in other words, explicitly say other humans are part of it), whether they effectively allow you to opt out, not just of the service itself but specifically of the human QA, if you want to, and whether that should be a specific opt-in instead.

That's on top of the larger arguments about whether or not security white papers and privacy policies or terms of service agreements are even human discoverable and legible to begin with, and at the opposite extreme, whether the concept of privacy in the digital age is viable or beneficial.

And, even more broadly and, I'd argue, more importantly, we're all still only at the beginning of this debate.

It's about location, behavioral, voice, and video data capture and analysis right now but, soon enough, the entire world, including all of us, is going to be constantly ingested by a wide range of sensors for AR, VR, and autonomous technologies.

It won't be very different from living on the Grid or in the Matrix, and if we don't figure out how to handle personal privacy now it's going to be even more problematic with everything coming next.

To help me sort through all of this, I have voice-first expert Brian Roemmele on the line. Hit play on the video above to watch our discussion.

Apple is putting up billboards, literal billboards, saying how seriously they take our privacy, yet look at where we are with these Siri stories, both from back in April and just now, this weekend.

Facebook and Google say they're making their products much more private but so far they only seem to mean private from developers — developers who compete with them on their own platforms. And ongoing investigations and Facebook's recent $5 billion fine make exactly zero dent in their policies or with their investors.

Some find this unacceptable and demand changes and penalties severe enough to compel changes. Others find the very concept of privacy in the data age ludicrous, even constraining.

So, we've summarized the articles, the accusations, the concerns, the responses, and the dismissals, and we've talked about why it's currently done this way and how it could be done better in the future.

Now I want to hear from you. What do you think about all of this, especially privacy, the right or ridiculousness of it, now, today?

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.
