iPhone 11 Face ID vs. Google Pixel 4 Face Unlock: FIGHT!

Back in 2017, Apple introduced Face ID on the iPhone X. It was the first real biometric facial geometry identification scanner. It couldn't do multiple registrations like Touch ID, but what it did, it did even better — including and especially making authentication feel almost transparent.

Now, Google has just released Face Unlock on the Pixel 4. The basic biometric facial geometry identification system is pretty much identical to Face ID. It adds some extra hardware and software for extra convenience, but some of that is region-dependent, and it's also missing a key aspect of operational security. At least for now.

So, which one is better and why? Let's find out.

Face ID vs. Face Unlock: Evolution

Both Apple and now Google have abandoned fingerprint authentication for facial geometry. Yeah, I know, some people really want both. But full-on depth-sensing camera systems are still relatively expensive components. So, having that, plus an in-display fingerprint sensor that actually works reliably and securely, bumps up the bill of materials and the price along with it.

Since the iPhone 11 already starts at $699 and the Pixel 4 at $799, and people, often the same people, are already complaining that's too high, all we can do for now is dance with the biometrics they brung us.

At least as best as we can. Google hasn't published much about how, exactly, Face Unlock works and, based on all the reviews I watched and read, they didn't say much if anything about it, either.

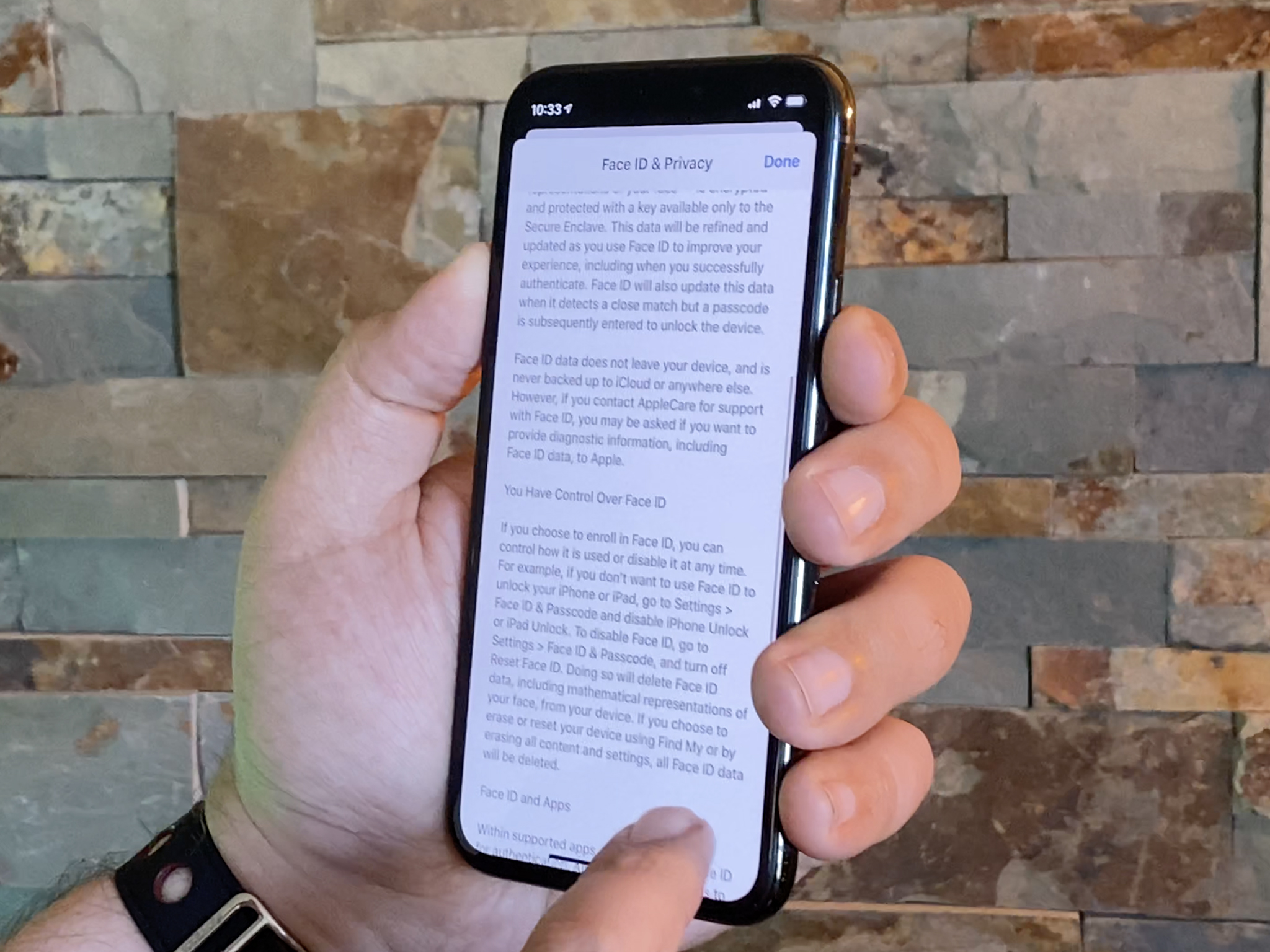

That's in stark contrast to Apple, who did extensive briefings following the event and published white paper level details on Face ID shortly thereafter.


For the purposes of this video, since Google is using such similar technology, I'm going to assume they're also using a similar process. If and when they choose to or are pressured to elaborate, I'll update. Cool?

Face ID vs. Face Unlock: Registration

You have to register your facial geometry — in other words, scan in the data — in order to set up and start using Face ID or Face Unlock for authentication.

Apple's interface for this is really elegant. Tap to get started. Turn your head. Tap again. Turn your head again. And… done.

On the hardware side, flood illuminators cover your face in infrared light so that the system has a canvas to work against, even in the dark. Then, projectors splash a grid of over 30,000 contrasting dots on your face, along with a device-specific pattern as well. That makes it harder to spoof the system digitally or physically.

Next, an infrared camera captures 2D images and 3D depth data to essentially create a model of your facial geometry. Apple crops the images as tightly as possible, so they don't keep any information about where you are or what's behind you in the frame. Then, they encrypt the data and send it over a locked-down hardware channel to the Secure Enclave on the A-series chipset. Originally, that was the A11 Bionic. Now, the A13 Bionic.

There, a secure portion of Apple's Neural Engine Block transforms it into math, but also retains the original data so the Face ID neural networks can be updated without requiring you to re-enroll your facial geometry each time.

Neither the data nor the math derived from it ever leaves the Secure Enclave. It's never backed up and never hits any servers anywhere, ever.

And that's it. You're done.

Almost. Apple gives you the option of setting up an alternate appearance at any time. You do it by going through the registration process a second time. So, for example, you can still use it even if you make yourself up very differently for work, for fun, for personal reasons, or any reason at all.

Google's setup interface is remarkably similar in design but different in implementation. It's not as elegant but it is more verbose and less repetitive. Kinda.

First, they give you lots of text up front detailing both universal issues with facial geometry scanning, like the inability to distinguish between twins or some close relatives, as well as issues specific to the Pixel, which we'll get into in a minute.

Secondly, you only have to turn your head once. But, it's super fussy about how you do it: Center your head better. Turn less. Turn slower! But, if you follow the directives and just keep on keeping on, it eventually finishes anyway.

The Pixel has two infrared cameras, one on each side, which should make for a more robust reading of the dot patterns. Google also has their own Titan M Security chip, which should function similarly to Apple's Secure Enclave, and Pixel Neural Core, which should function similarly to Apple's Neural Engine Block.

I don't know enough about the silicon architecture to tell if Apple doing everything in a single SoC and Google doing everything in discrete co-processors makes for any advantages or disadvantages, or if it's all just functionally the same.

Google does say they don't store the original images the way Apple does, only the models, but that neither the original images nor the models are sent to Google or shared with any other Google services or apps. Which is good, because Google's handling of face data has been controversial at times to say the least.

At that point, you're registered and done.

Now, I really like how Apple's setup seems far less sensitive to small deviations in angle and speed. Theoretically, Google only having you turn your head once is simpler but because it can complain more, it can end up taking just as long and can be more frustrating to complete. Especially the first time you go through the process.

I love that Google discloses the issues with facial geometry scanning right up front, as part of the process. Apple mentioned things like the evil twin attack on stage when they first announced Face ID, and Google did not, but who knows how many people saw or remembered that. With it built into setup, everyone using Face Unlock will see it every time they set it up.

Both let you tap through for additional information, with Apple being more verbose here and Google, briefer.

Face ID vs. Face Unlock: Authentication

When you want to unlock, you wake up your iPhone either by raising it up or tapping the screen. The accelerometer then fires up the system and it goes through a process similar to registration.

With Face ID, attention detection makes sure your eyes are open and you're actively and deliberately looking at your iPhone (you can turn this off for accessibility reasons if you need to). Otherwise, it won't unlock. That helps prevent surprise or incapacitation attacks, where someone else tries to use Face ID to unlock your phone without your consent.

The flood illuminator and dot projector then go to work. This time, though, the infrared camera captures only a randomized sequence of 2D images and depth data, again to help counter spoofing attacks.

The neural engine then converts that to math and compares it to the math from your initial scan.

This isn't the simpler pattern matching of fingerprint scans. It requires neural networks to determine if it's actually your facial geometry or not your facial geometry, including rejecting attempts to fake your facial geometry.

If you're not familiar with how machine learning and neural networks work, imagine Tinder for computers. Yes. No. No. No. Yes. Yes. No. No. Hotdog. Something like that.

They're not coded like traditional programs. They're trained, more like pets. And, once you let them loose, they carry on without you.

They're also adversarial. So, imagine a Batman network trying to let you into your phone but only you. And a Joker network, continuously trying new ways to get past the Batman network, continuously making the Batman network better.

It's amazingly cool stuff.

Anyway, if the math matches, a "yes" token is released and you're on your way. If it doesn't, you need to try again, fall back to passcode, or stay locked out of your iPhone.
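To make that match step a little more concrete, here's a toy sketch in Python. Apple hasn't published the actual comparison, so everything in it is an assumption for illustration: the function names, the 0.9 threshold, and the idea of comparing short vectors with cosine similarity. The real thing is neural networks running inside the Secure Enclave, not four numbers in a list.

```python
import math

MATCH_THRESHOLD = 0.9  # hypothetical cutoff, not Apple's real value


def cosine_similarity(a, b):
    """Similarity between two equal-length vectors, from -1.0 to 1.0."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def unlock_decision(enrolled, candidate, attention_ok, require_attention=True):
    """Return "unlock" or "retry_or_passcode" for a single attempt."""
    # Attention check comes first: eyes closed means no unlock at all.
    if require_attention and not attention_ok:
        return "retry_or_passcode"
    # Compare the new "math" against the "math" from registration.
    if cosine_similarity(enrolled, candidate) >= MATCH_THRESHOLD:
        return "unlock"
    return "retry_or_passcode"


# Made-up vectors standing in for the math derived from each scan.
enrolled = [0.12, 0.80, 0.55, 0.10]
same_face = [0.10, 0.82, 0.53, 0.12]
other_face = [0.90, 0.05, 0.10, 0.60]

print(unlock_decision(enrolled, same_face, attention_ok=True))    # unlock
print(unlock_decision(enrolled, other_face, attention_ok=True))   # retry_or_passcode
print(unlock_decision(enrolled, same_face, attention_ok=False))   # retry_or_passcode
```

Passing `require_attention=False` models the accessibility setting: the same face with eyes closed would then unlock.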

Face ID may store the math from successful unlock attempts and even from unsuccessful unlock attempts where you immediately followed up by entering the passcode. That's to help the system learn and grow with changes to your face or look that might happen over time, even the more dramatic ones, like shaves, haircuts, even injuries.

After it's used the data to augment a limited number of subsequent unlocks, Face ID discards the data and, potentially, repeats the augmentation cycle again. And again.
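Here's one way to picture that augmentation cycle, again as a Python sketch and not Apple's implementation. The `FaceTemplate` and `Augmentation` classes and the `MAX_USES` limit of 3 are hypothetical. The only parts taken from the description above are the shape of the cycle: keep the extra math, use it for a limited number of later unlocks, then throw it away.

```python
from dataclasses import dataclass, field

MAX_USES = 3  # hypothetical: how many later unlocks one augmentation may assist


@dataclass
class Augmentation:
    vector: list
    uses_left: int = MAX_USES


@dataclass
class FaceTemplate:
    enrolled: list  # math from the original registration
    augmentations: list = field(default_factory=list)

    def record(self, vector, matched, passcode_followed=False):
        """Keep math from a successful unlock, or from a miss followed by the passcode."""
        if matched or passcode_followed:
            self.augmentations.append(Augmentation(list(vector)))

    def references(self):
        """Vectors to compare against on the next attempt; spends one use of each augmentation."""
        refs = [self.enrolled] + [a.vector for a in self.augmentations]
        for a in self.augmentations:
            a.uses_left -= 1
        # Discard augmentations that have served their limited number of unlocks.
        self.augmentations = [a for a in self.augmentations if a.uses_left > 0]
        return refs


template = FaceTemplate(enrolled=[0.1, 0.8])
# A post-shave miss, immediately followed by the correct passcode.
template.record([0.2, 0.7], matched=False, passcode_followed=True)

counts = [len(template.references()) for _ in range(4)]
print(counts)  # [2, 2, 2, 1]
```

The extra vector helps with three unlocks and is gone by the fourth, at which point a fresh successful unlock could start the cycle over.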

Because the technology was so new at the time, Apple focused on making it as consistent and reliable as possible from right-side-up portrait mode orientation, and about 45 degrees off-axis either way. That includes the physical angling of the TrueDepth camera system.

They've since made it work 360 degrees on the iPad Pro but, sadly, haven't seen fit to bring that functionality to the iPhone yet, leaving it far more frustrating to unlock while lying down.

The unlock also, literally, only unlocks the phone. To open it, you have to take the second step of swiping up from the very bottom of the lock screen. Swipe up too high, and you get notifications instead, which is bewilderingly inconsistent with the swipe down from the top left corner that reveals notifications when the iPhone is open.

Face Unlock on the Pixel is again very similar in the broad strokes but different in the details.

Start with MotionSense, originally called Project Soli. It's an actual Daredevil-style radar sense chip that can detect when you're reaching for your Pixel and fire up the Face Unlock system so it's ready to go before you even start to lift or tap it.

It also works from any angle, like the iPad, so you can unlock it even if you pick it up upside down, or you're lying down at the time.

Unfortunately, Google either couldn't or wouldn't enforce attention for Face Unlock at launch. So, it currently works even if your eyes are closed, and that means it is susceptible to surprise or incapacitation attacks — in other words, if you're asleep, restrained, or unconscious. Google has said they'll add the feature in a future update but it might take a while.

Again, Google hasn't elaborated on their specific process, but it's safe to assume the flood illuminator and dot projectors fire, the dual infrared cameras capture all or some of your facial geometry, and then send it to the Titan M security chip for comparison to the models stored there.

At that point, if they match, the Pixel unlocks and opens. If you'd rather see your lock screen instead of going back to what you were previously using, you can choose that option in settings.

I really like that it's an option, though.

There are two different kinds of workflows. One is all about the notifications. You just want to see your Lock screen and everything that might be important but you don't want to dive into and maybe get distracted by all the apps on your phone.

The iPhone is good at that because Face ID, while it doesn't open the phone, does expand recent notifications.

The Pixel has an always-on display, though, and lock screen information very similar to Apple Watch complications, and that takes glanceability to a whole other level. It's something I've been asking for on iOS for years now.

The second type of workflow is when you don't care about notifications and just need to get into your phone and get something done as fast as possible.

The Pixel is again great for this because you can choose to go right into your phone.

It's not perfect, because it can't read your mind and determine which workflow you want and just let you do either one at any given time. You have to pick the one you use more often and stick with it until you change it.

But, at least it lets you change. The iPhone doesn't. And, again, it's something I've been asking for for years.

Not having the option to require open eyes and attention just feels irresponsible on Google's part, though.

Yes, biometrics are more username than password, and yes, fingerprints are subject to the same kind of attacks — although you have 10 potential fingers and only 1 potential face. But any security expert worth their credentials will tell you defense is done in depth.

You throw as many roadblocks and bumps in the attack path as possible. That's your job. Your "you had one job."

For now, Google is pointing anyone concerned towards their Lockdown option. You have to enable it in Settings > Display > Advanced > Lock screen display, then tap Show Lockdown Option.

Once you've done that, you can hold down the power button and then tap Lockdown to temporarily disable biometrics.

Even here, though, Apple is more elegant. To temporarily disable biometrics at any time, you don't have to flip any settings, you just squeeze the power and volume buttons at the same time and you're locked down.

Theoretically, MotionSense should allow you to unlock your Pixel without having to touch it, and it does. Practically speaking, though, the radar field around the Pixel is so short-range that it doesn't make a huge difference right now. Unless your hands are covered in gravy or icing or whatever. But, that's still a legit difference…

Depending on where you live. MotionSense operates on the 60 GHz band, and that hasn't been approved in a lot of geographies. Including India. Live in or travel to one of those places, and MotionSense turns off.

On both the iPhone and Pixel, you can also trigger unlock from a distance by invoking Siri or Google Assistant, which I personally like better, and that even gets around the iPhone's lack of simultaneous unlock and open.

Face ID vs. Face Unlock: Integration

Both the iPhone's Face ID and the Pixel's Face Unlock are available to developers so they can use them to secure apps, from password managers to banking clients to… everything in between.

Apple was really clever in how they implemented this. When they initially rolled out the Touch ID application programming interface, or API, they made it less specifically about fingerprints and more generally about biometrics. For developers and users, they abstracted most of the differences away into a single Local Authentication framework.

So, aside from gaining the ability to adjust text strings to properly label Face ID vs. Touch ID, it quote-unquote just worked for many if not most apps.
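The payoff of that kind of abstraction can be sketched in a few lines of Python. This is an analogy, not Apple's API: the `Biometry` enum, `prompt_label`, and `authenticate` are hypothetical stand-ins. The point is that the app asks for "biometrics" once, and only the label changes with the hardware.

```python
from enum import Enum


class Biometry(Enum):
    TOUCH_ID = "Touch ID"
    FACE_ID = "Face ID"


def prompt_label(biometry):
    """The one thing an app adjusts: the text string shown to the user."""
    return f"Unlock with {biometry.value}"


def authenticate(biometry, sensor_says_match):
    """App-facing call. It never touches fingerprint or face specifics."""
    return {"prompt": prompt_label(biometry), "granted": bool(sensor_says_match)}


# The same app code runs on a Touch ID phone and a Face ID phone.
print(authenticate(Biometry.TOUCH_ID, True))
print(authenticate(Biometry.FACE_ID, True))
```

Because the app never asked "is this a fingerprint?" in the first place, swapping the sensor underneath doesn't break it.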

With Face Unlock, there's a greater degree of complexity. For apps to work, they have to adopt Android's BiometricPrompt API. If an app is using the old API, it will only look for fingerprint scans, not facial geometry scans, and just drop you back to password mode.

Currently, only a handful of apps support it, but that should change over time. Hopefully rapidly.
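Why the old-API apps fall back to a password is easier to see as a toy model. This Python sketch doesn't call any Android code. The two functions and the sensor sets are hypothetical, and they only mimic the behavior described above: an app that looks for a fingerprint reader finds nothing on a Pixel 4, while an app written against a BiometricPrompt-style API accepts whatever biometric the phone has.

```python
FACE = "face"
FINGERPRINT = "fingerprint"


def legacy_fingerprint_auth(device_sensors):
    """Models an app on the old API: it only ever looks for a fingerprint reader."""
    return "biometric" if FINGERPRINT in device_sensors else "password"


def generic_biometric_auth(device_sensors):
    """Models an app on a BiometricPrompt-style API: any supported biometric will do."""
    return "biometric" if device_sensors & {FACE, FINGERPRINT} else "password"


pixel_4 = {FACE}             # face unlock only, no fingerprint reader
older_phone = {FINGERPRINT}  # fingerprint reader only

print(legacy_fingerprint_auth(pixel_4))      # password
print(generic_biometric_auth(pixel_4))       # biometric
print(legacy_fingerprint_auth(older_phone))  # biometric
```

Same phone, same face, different result, and the only variable is which API the app was written against.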

Face ID vs. Face Unlock: Conclusion

It's tempting to call facial biometric identity scanning a draw between Apple and Google, iPhone and Pixel. And the truth is, both do some things I really wish the other would adopt as well.

Apple's setup is slicker but requires two steps. Google's complains so much, though, it can make one step feel as long as three.

The repetition no doubt makes the scan more robust but I'm not sure the user actually has to know or tap through for that to happen. Likewise, Google should hush up and make registration less finicky.

Apple explained Face ID better at its introduction and has since detailed it to a high degree in white papers, where Google's remains something of a black box, but one that does disclose its limitations every time you set it up.

I'd love to see a white paper from Google and a more info button from Apple during setup. That would handle disclosure without mucking up the experience.

Neither the lack of 360-degree scanning on iPhone nor the lack of an attention requirement on Pixel will be problems for most people most of the time, but they shouldn't be problems for anyone any of the time.

In an ideal world, the iPhone would work like the iPad and Pixel and just unlock regardless of orientation and the Pixel would work like the iPhone and iPad and require you to be looking at it before it will unlock. Same for the iPhone and having the option to unlock and open all at once.

And, you know, Google had two years to learn all this from Face ID, and Apple has had two years to implement all this in Face ID, so unless they deliberately don't want this stuff — which is hard to imagine — it's tough to understand why they don't all do it all.

Again, Google has a theoretical advantage thanks to the MotionSense radar chip, where available, but their overall process hasn't been disclosed or tested to the extent Apple's has.

Lack of an attention requirement aside, we just don't know how secure, private, and adaptive the neural networks are. Ethical issues over the way it was trained aside, Google being Google, we can assume the very best, but no one is hammering on it the way Face ID was hammered on at launch. You know, every blogger and their hired VFX teams. At least not yet.

And, they really should. Go hard on Apple. Pretty please. It's great for Apple customers. But go hard on everyone else as well. That's great for all customers.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.