AMD Radeon — is it 'pro' enough for the MacBook Pro?

As soon as Apple announced the new MacBook Pro, there were rumblings that the 15-inch version's AMD Polaris graphics were underpowered and insufficient for a "pro" machine, and that NVIDIA's lower-power Pascal options would have been a better choice. On PC, in the Windows world where DirectX exists, I would agree. But this is Apple's world. The question of whether a GPU is sufficient has to be looked at in the context of why this machine exists in the first place.

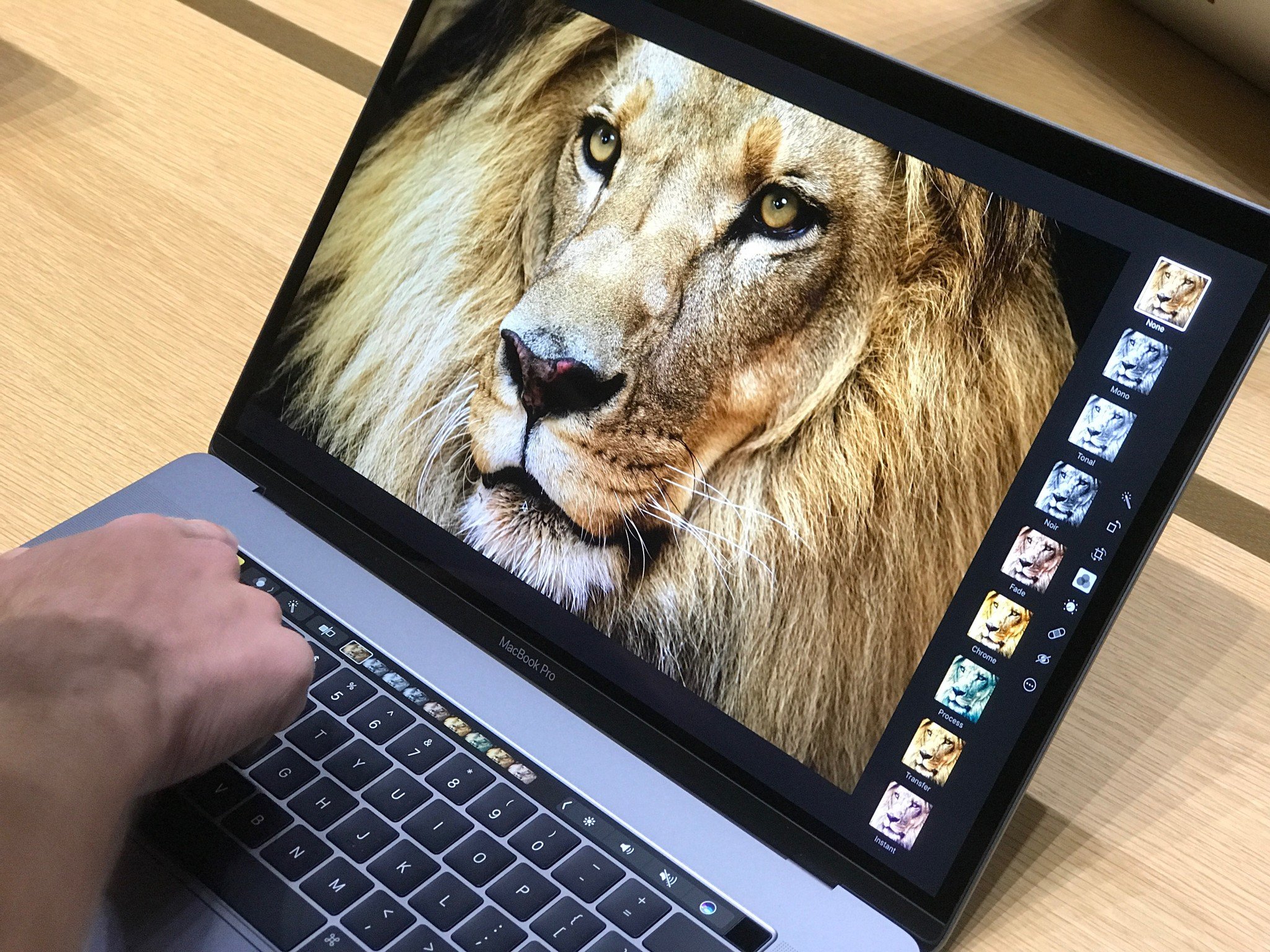

The MacBook Pro is not and has never been built for high-end gaming. EVER. Gaming demands different hardware priorities than the ones Apple targets with this machine. The MacBook Pro was designed for video editors, photo editors, and music producers who are not always at a desk and who want more performance than the regular (non-Pro) MacBook offers.


Apple wants to become self-sufficient, both in the APIs that control its OS and in the hardware architecture it employs. In Apple's perfect world, both the software and the silicon would be designed entirely in-house. AMD's GPUs give it the closest shot at achieving this, especially alongside Apple's own graphics framework, Metal.

And that's why it's highly unlikely that we will see an NVIDIA GPU in an Apple product again.

So, why no NVIDIA?

NVIDIA understands which customer base gives it the best opportunity to deliver value and make money. Outside of the high-end Tesla/Titan range of GPUs, there is the GeForce line. Traditionally, as a developer, you would want to get the best performance out of DirectX 11/12 and, more recently, Vulkan.

These APIs are designed to run on thousands of hardware configurations. Vulkan and DX12 are more optimized than any of their predecessors, but they still can't match what a developer could do with more direct access to the hardware. Why does this matter? It is the basis for understanding why NVIDIA's consumer GPUs behave the way they do.

NVIDIA GPUs are awesome for gaming on Windows, which is primarily what they were designed for. Apple did not design the MacBook Pro for gaming. So NVIDIA spends a lot of time and money optimizing its business for a scenario that runs opposite to Apple's goals. The additional strengths NVIDIA has built, in data centers, deep learning, and other areas where it excels, are the result of its hard work in the gaming arena over the last 20 years. As a whole, modern consumer NVIDIA GPUs (and their driver stack) are designed to run DirectX games and CUDA applications as quickly and efficiently as possible in a Windows environment.


And Apple has Metal?

Yup. Because macOS doesn't have DirectX, but instead has Apple's own Metal, the playing field changes. Metal was developed from the ground up by Apple to be fast and efficient in the ways it considers most beneficial to its customers. A few colleagues who are game developers at large organizations, whose names I'm unable to disclose, have told me about their experience working with NVIDIA.


NVIDIA is a first-rate organization and is very supportive of its customers and developers. If you decide to leave the umbrella of CUDA (the programming model for NVIDIA GPUs), though, you're on your own. Some "items" (features) offered to developers are not actually built into NVIDIA GPUs in a way that can be used directly and traditionally. NVIDIA's world-class engineers bind those pieces together with CUDA and, for games, with GameWorks.

NVIDIA has poured many resources into CUDA, and developers who bypass it lose those features and functionality. Its hardware is designed so that CUDA complements it, and that's a good thing... for everyone but Apple.

If you want to get the most out of an NVIDIA GPU, you need to use CUDA. I'm told that no matter who you are, NVIDIA will not help you if you decide to go "lower than CUDA," and will not provide technical support for that journey.

How does AMD help?

AMD has similar goals to NVIDIA but is in a different situation, both financially and competitively. While AMD may not have the fastest consumer GPUs in raw speed, it won't stop or discourage you from programming straight to the absolute lowest level of the hardware. You know, to the metal! In fact, it encourages it.


AMD's Graphics Core Next (GCN) architecture is more flexible and open than any NVIDIA architecture from Fermi through Pascal. By "flexible," I mean the freedom to chart your own path for how you use the GPU. Because of this flexibility, a developer, in this case Apple, could build an API on top of a GCN-type GPU with very few restrictions while using the silicon as efficiently as possible.

That brings us back to Metal?

Exactly. Apple's goal is to deliver power-efficient, fast, light machines, tuned precisely to their specific use cases. AMD's GCN is the only hardware on the Mac that isn't locked into fixed functions. Intel delivers fixed-function x86 chips, and NVIDIA would deliver highly optimized CUDA chips, but AMD delivers a chip with few fixed functions and an open platform. Apple can have its way with AMD GPUs, which lets it extract high-end performance from what I call "mid-range Windows parts."

But wait! Doesn't Metal work on NVIDIA GPUs right now?

Yep. But for the reasons described above, there's only so much Apple would likely be willing to invest in moving forward with NVIDIA GPUs, because on them it can't easily "get to the metal."

Will the 2016 MacBook Pro with AMD Polaris graphics be able to perform at a "high-end" level?

Think of it like this: for a long time, Apple licensed designs from PowerVR for the GPUs inside its iOS devices. Those devices were usually at the top of their class, outperforming most Android devices that used the exact same or similar designs. This was due to Apple's solid implementation. Whenever the hardware is as flexible as AMD's, building the software stack on top of it is, in theory, much like developing a custom GPU. You can control nearly everything going in and out of that chip, including memory manipulation at the lowest level.

That's what AMD gives Apple on the Mac.

So, yes: in video editing, the new MacBook Pro running Final Cut Pro will deliver high-end performance, outpacing a Windows machine running Adobe Premiere by a large margin. It will also drive 4K and 5K displays, even dual 5K displays. Its GPU will not be a performance bottleneck, and it will perform like a pro.

If you don't believe me, go try one out for yourself.