How to archive websites and online documents on macOS

iMore offers spot-on advice and guidance from our team of experts, with decades of Apple device experience to lean on. Learn more with iMore!

Do you need to download a large quantity of online documentation for your work or university studies but have limited internet access? Or perhaps you simply want to store web documents locally so you can parse them with desktop tools? On macOS, you can easily archive any freely accessible URL (or an entire subdomain if you have the disk capacity!) with free and open source software (FOSS) in one simple terminal command. Here's how!

The wget command

The wget command is a network downloader that can retrieve and archive content over the HTTP, HTTPS, and FTP protocols. It's described as a "non-interactive" command because you can start the program and leave it to do its work without any further user interaction. The wget manual explains it this way:

Wget can follow links in HTML, XHTML, and CSS pages, to create local versions of remote web sites, fully recreating the directory structure of the original site. This is sometimes referred to as "recursive downloading." While doing that, Wget respects the Robot Exclusion Standard (/robots.txt). Wget can be instructed to convert the links in downloaded files to point at the local files, for offline viewing.

Options galore

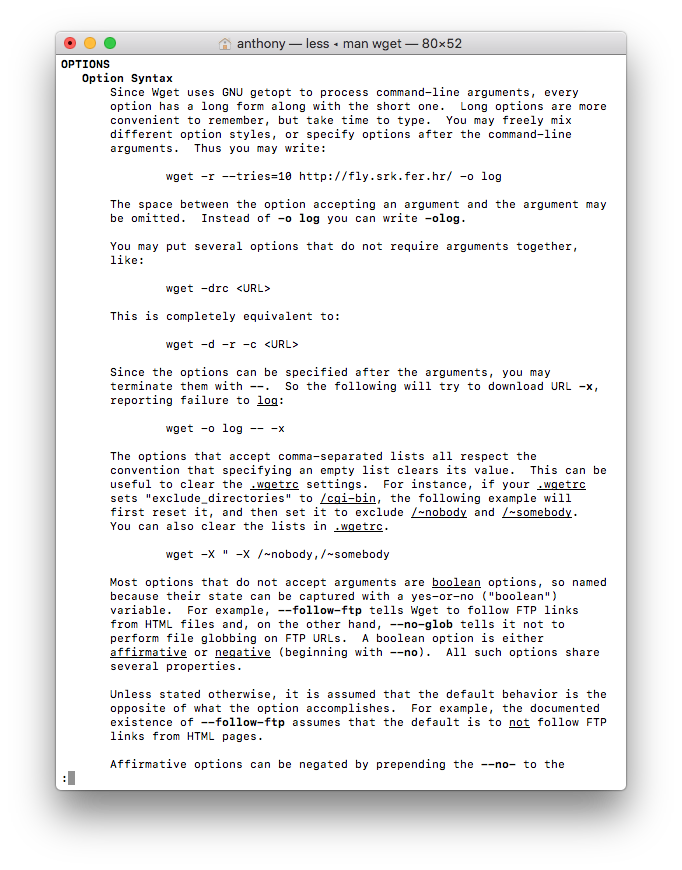

Since web protocols can be complicated, wget has a myriad of options to deal with that complexity. Need to archive only the first two link levels of a website? There's an option for that. Need to use a personal login to get access to specific directories? There's another option for that. Luckily, installing wget via the Homebrew package manager (explained briefly below) also installs the wget manual. You can access this manual from the terminal by typing man wget and pressing enter. You can then scroll through the document to find help on any available option, and press q to quit.
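As a rough sketch of looking up one option without paging through the whole manual, the snippet below filters wget's built-in --help summary for a given option name. The helper name wget_option_help is hypothetical (it is not part of wget), and the snippet assumes the GNU build of wget that Homebrew installs.

```shell
# wget_option_help is a hypothetical helper, not part of wget itself.
# It prints the lines of wget's --help summary that mention the given option.
wget_option_help() {
  if command -v wget >/dev/null 2>&1; then
    # "--" stops grep from treating the search term as one of its own options.
    wget --help | grep -- "$1"
  else
    echo "wget is not installed"
  fi
}

# Look up the recursion depth option.
wget_option_help "--level"
```

The --help summary is much shorter than the manual, so man wget remains the place to go for the full explanation of each option.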

Using wget

Using wget is simple. Fire up the Terminal app in macOS, enter wget URL-YOU-WANT-TO-ARCHIVE, and hit enter. Without any other options, wget retrieves only the single page or file at the URL you've entered and does not follow its links. If, for example, you wanted to archive up to six links deep (make certain you have enough disk space!) and also convert the links in the archived files so you can browse them locally on your computer, you'd do the following.

- Open Terminal.

- Type wget --recursive --level=6 --convert-links http://URL-YOU-WANT-TO-ARCHIVE.

- Press enter.

Wget will now download files from the URL, following links up to six levels deep, and save the pages and documents on your hard drive with their links converted so they can be viewed locally. The files are stored in a folder named after the website's host, with subfolders that mirror the original website's directory structure.
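The steps above can be wrapped in a small shell function so the command can be previewed before anything is downloaded. This is only a sketch under stated assumptions: the function name archive_site and the DRY_RUN variable are hypothetical, and http://example.com/ is a placeholder for the URL you want to archive. The wget flags are the same three used in the steps above.

```shell
#!/bin/sh
# archive_site DEPTH URL (hypothetical helper, not part of wget)
# Prints the wget command when DRY_RUN=1, otherwise runs it.
archive_site() {
  depth=$1
  url=$2
  # Rebuild the argument list as the full wget command line.
  set -- wget --recursive --level="$depth" --convert-links "$url"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Preview the command first; set DRY_RUN=0 to start the real download.
DRY_RUN=1
archive_site 6 "http://example.com/"
# prints: wget --recursive --level=6 --convert-links http://example.com/
```

Once the printed command looks right, setting DRY_RUN=0 and calling the function again starts the download. Options such as --no-parent (stay below the starting directory) and --wait=SECONDS (pause between requests) may also be worth looking up in the manual before archiving a large site.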

Getting wget

You can download and compile wget directly from its FOSS maintainers, or you can install the Homebrew package manager and simply run the brew install wget command in the terminal to have it done automatically for you. You can check out our article on installing Homebrew for more information.
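Before installing anything, it can help to check whether wget is already on your system. The snippet below is a minimal sketch of that check; the function name check_wget is hypothetical, and the install hint assumes Homebrew is already set up.

```shell
# check_wget is a hypothetical helper: it reports whether wget is on the PATH
# and suggests the Homebrew install command if it is not.
check_wget() {
  if command -v wget >/dev/null 2>&1; then
    echo "wget found: $(command -v wget)"
  else
    echo "wget not found; try: brew install wget"
  fi
}

check_wget
```

If the check reports that wget is missing, running brew install wget and then the check again should show the path where Homebrew placed it.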

Final comments

Some of you may be wondering why I bother with command line programs when I can likely find a nice GUI program that does the same thing. The answer is simplicity and convenience. I can quickly run a command in the terminal without waiting for a large graphical program to start. I can schedule a command to run at a later time. I can create a script to run a command depending on various triggers. The flexibility of the command line trumps GUIs in some cases. On top of that, there are so many free software commands out there that you might as well give them a try and see what you've been missing.

Do you know any commands that might be good for us to know? Let us know your thoughts in the comments.