Apple introduces new child safety protections to help detect CSAM and more

What you need to know

- Apple commits to adding extra protections for children across its platforms.

- New tools will be added in Messages to help protect children from predators.

- New tools in iOS and iPadOS will help detect CSAM in iCloud Photos.

It's an ugly part of the world we live in, but children are often the target of abuse and exploitation online and through technology. Today, Apple announced a set of new protections, collectively called Apple Child Safety, coming to iOS 15, iPadOS 15, and macOS Monterey to help protect children and limit the spread of child sexual abuse material (CSAM).

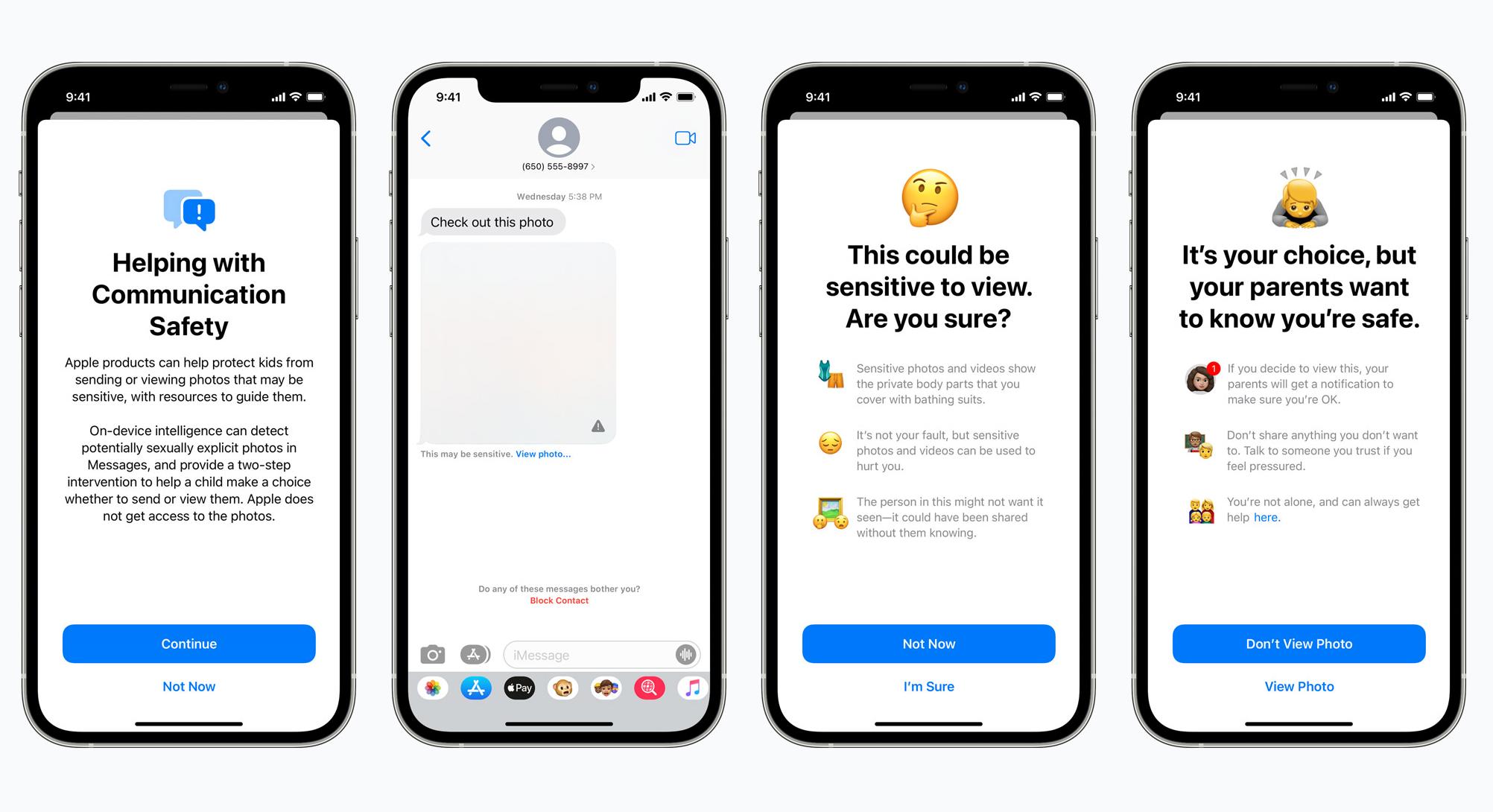

The Messages app will get new tools to warn children and their parents when a child receives or sends sexually explicit photos. If an explicit photo is received, the image will be blurred and the child will be warned. On top of the warning about the content, the child will be presented with resources and reassured that it is okay not to view the photo. Apple also says parents can be notified that their child may not be okay.

"As an additional precaution, the child can also be told that, to make sure they are safe, their parents will get a message if they do view it. Similar protections are available if a child attempts to send sexually explicit photos. The child will be warned before the photo is sent, and the parents can receive a message if the child chooses to send it."

Apple also says these new tools in Messages use on-device machine learning to identify the images, in a way that does not give the company access to the messages.

CSAM detection

Another big concern Apple is addressing is the spread of CSAM (Child Sexual Abuse Material). New technology in iOS 15 and iPadOS 15 will allow Apple to detect known CSAM images stored in iCloud Photos and report them to the National Center for Missing and Exploited Children (NCMEC).

"Apple's method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users' devices."
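To make the idea of on-device hash matching a little more concrete, here is a minimal Python sketch built on heavy simplifying assumptions. It uses SHA-256, an exact-match hash, as a stand-in for the perceptual hash (NeuralHash) that Apple's system relies on, and it skips the step where Apple makes the database unreadable. The names `image_hash`, `KNOWN_HASHES`, and `matches_known` are hypothetical. Unlike this toy, Apple says the device itself does not learn whether a photo matched; the result is sealed inside an encrypted "safety voucher."

```python
import hashlib

def image_hash(data: bytes) -> str:
    # Stand-in for a perceptual hash: SHA-256 only matches byte-identical files,
    # whereas a perceptual hash aims to match visually similar copies too.
    return hashlib.sha256(data).hexdigest()

# Hypothetical database of known-image hashes stored on the device.
KNOWN_HASHES = {image_hash(b"known-image-1"), image_hash(b"known-image-2")}

def matches_known(data: bytes, known: set) -> bool:
    """Return True if the image's hash appears in the known-hash set."""
    return image_hash(data) in known

# Check each photo in a hypothetical library before it is uploaded.
library = [b"holiday-photo", b"known-image-2", b"cat-photo"]
flags = [matches_known(photo, KNOWN_HASHES) for photo in library]
print(flags)  # [False, True, False]
```

The key property is that only images already in the database can match; a new photo, however sensitive, produces a hash that simply isn't in the set.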

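The exact process is complicated: the match result for each photo is encoded in an encrypted "safety voucher" that is uploaded to iCloud Photos along with the image. Apple says the method has an extremely low chance of flagging content incorrectly.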

"Using another technology called threshold secret sharing, the system ensures the contents of the safety vouchers cannot be interpreted by Apple unless the iCloud Photos account crosses a threshold of known CSAM content. The threshold is set to provide an extremely high level of accuracy and ensures less than a one in one trillion chance per year of incorrectly flagging a given account."
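Threshold secret sharing is a standard cryptographic technique, and its classic form is Shamir's scheme: a secret is split into shares so that any `threshold` of them can rebuild it, while fewer shares reveal nothing about it. The Python sketch below is a toy illustration of that general technique, not Apple's implementation; the field size, the threshold of 3, and the helper names are all assumptions. As a rough analogy to Apple's description, think of `account_key` as the key that unlocks an account's safety vouchers, with one share attached to each matching photo.

```python
import random

PRIME = 2**127 - 1  # Mersenne prime; all arithmetic happens modulo this field size

def make_shares(secret, threshold, count, rng):
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold - 1 whose value at x = 0 is the secret.
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, reduced mod PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares

def recover_secret(shares):
    """Lagrange interpolation at x = 0 over the integers mod PRIME."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

rng = random.Random(2021)
account_key = rng.randrange(PRIME)  # hypothetical per-account secret
shares = make_shares(account_key, threshold=3, count=5, rng=rng)

print(recover_secret(shares[:3]) == account_key)  # True: threshold reached
print(recover_secret(shares[2:]) == account_key)  # True: any 3 of the 5 work
print(recover_secret(shares[:2]) == account_key)  # below threshold: wrong value
```

The design point worth noticing is that below the threshold the shares aren't merely hard to combine; mathematically, every possible secret is equally consistent with them, so holding a few shares tells the holder nothing.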

Siri and Search updates

Lastly, Apple announced that Siri and Search will provide additional resources to help children and parents stay safe online and get help if they find themselves in unsafe situations. Apple says there will also be protections for when someone searches for queries related to CSAM.

These updates are expected in iOS 15, iPadOS 15, watchOS 8, and macOS Monterey later this year.


Luke Filipowicz has been a writer at iMore, covering Apple for nearly a decade now. He writes a lot about Apple Watch and iPad but covers the iPhone and Mac as well. He often describes himself as an "Apple user on a budget" and firmly believes that great technology can be affordable if you know where to look. Luke also heads up the iMore Show, a weekly podcast that focuses on Apple news, rumors, and products but likes to have some fun along the way.

Luke knows he spends more time on Twitter than he probably should, so feel free to follow him or give him a shout on social media @LukeFilipowicz.