How to determine if your streaming music's audio quality is worth paying for

A lot of music streaming services advertise different bit rates to make their service more appealing than the next. Spotify, Beats Music, and others even advertise up to 320 kbps over cellular. That eats a heck of a lot of data, but for audiophiles, good audio quality while streaming music is a must. Unfortunately, in my experience, mileage varies greatly from what's actually advertised. So if you're curious what your service is really streaming over cellular, here's how to find out right from your iPhone!

How to tell what bit rate your iPhone is streaming music at over cellular

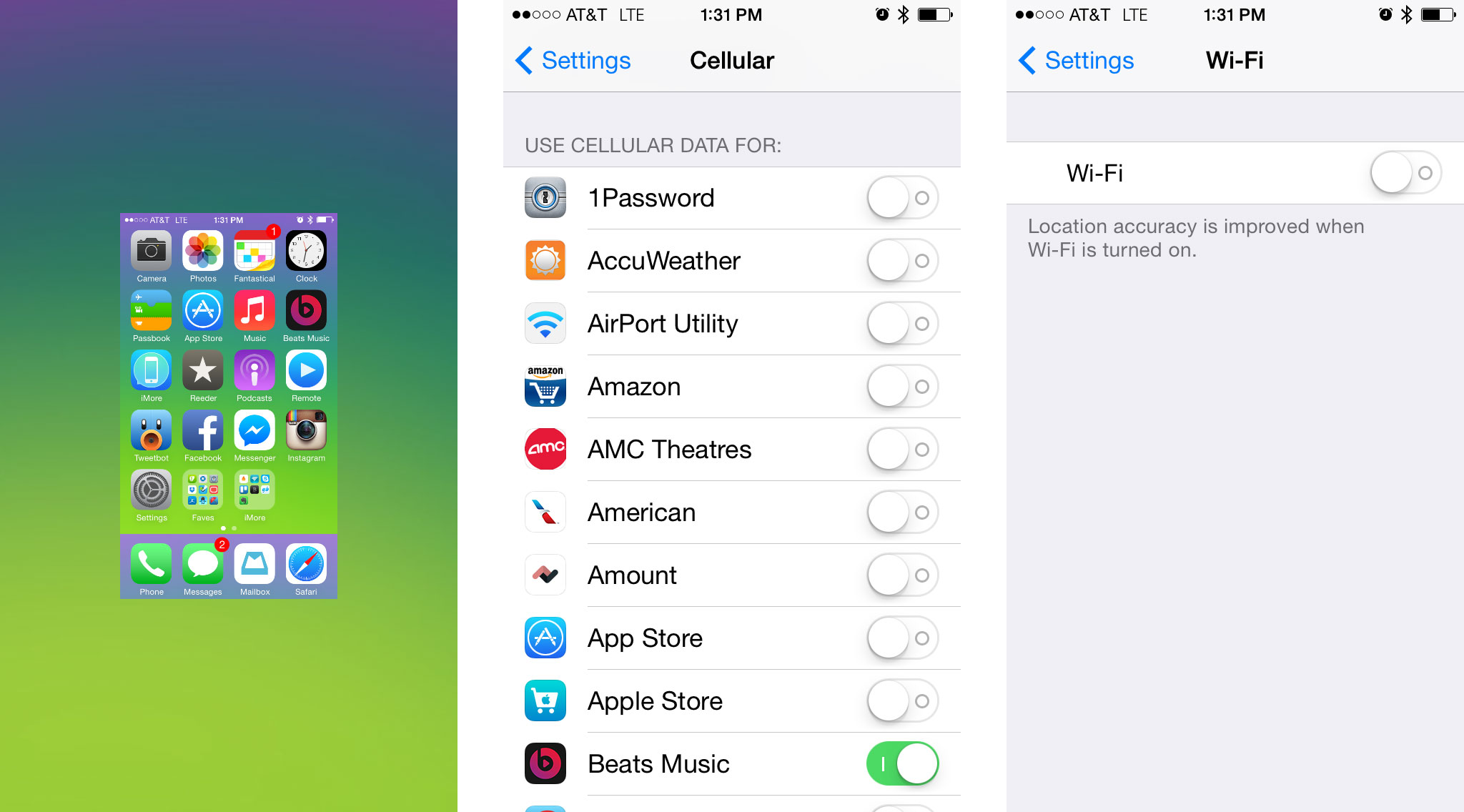

Before actually testing the bit rate of your chosen music service, it's important to make sure your results aren't skewed by other apps using data in the background. To do this, close all apps from the multitasking view, turn off cellular data for everything except your streaming app, and disable Wi-Fi. To turn off cellular data for all other apps, go to Settings > Cellular and turn cellular data Off for everything but the streaming app you want to test.

Doing the above steps will prevent data from being skewed during testing.

Finding a reference point

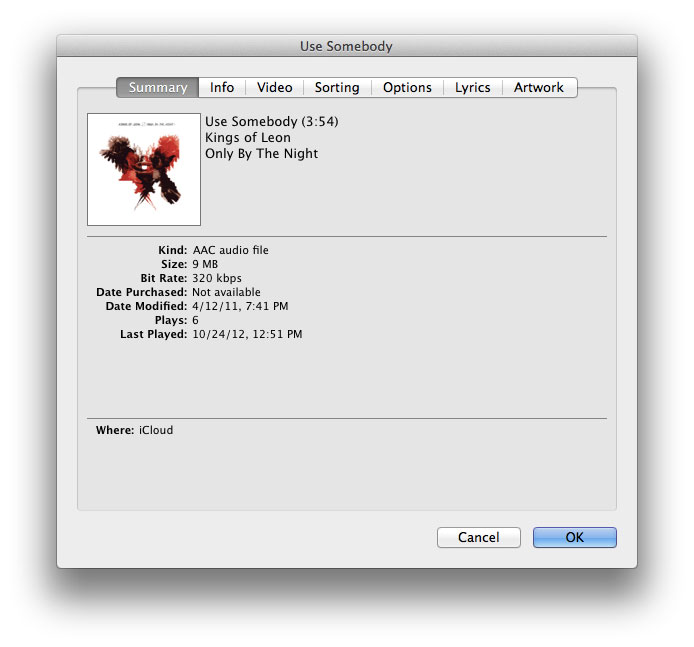

Once you've done the above, you need to find a reference song that is available across all the streaming services you'd like to test. I do this by checking my iTunes Library for a song I already own and viewing the info on it. If possible, look for a track that is at least 256 kbps, or 320 kbps if you have one. All purchased content from iTunes will be 256 kbps.

In my example, I'm going to use a track called "Use Somebody" by Kings of Leon that has a bit rate of 320 kbps. It is 3 minutes and 54 seconds long and is 9 MB. The important number here is the 9 MB. Feel free to use the same reference song as me if you'd like, or pick your own. You just need to know the bit rate and how large it is.
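If your music library doesn't show a file size, you can estimate one from the bit rate and track length. The snippet below is a minimal sketch of that arithmetic, not an exact model of any file format. The function name is made up for illustration, and it assumes a constant bit rate, 1 kbps = 1,000 bits per second, and 1 MB = 1,000,000 bytes. It also ignores tags and embedded artwork.

```python
def expected_size_mb(bit_rate_kbps: float, minutes: int, seconds: int) -> float:
    """Approximate size in MB of audio encoded at a constant bit rate.

    Assumes 1 kbps = 1,000 bits/s and 1 MB = 1,000,000 bytes.
    Ignores metadata such as tags and embedded artwork.
    """
    duration_s = minutes * 60 + seconds
    total_bits = bit_rate_kbps * 1000 * duration_s
    return total_bits / 8 / 1_000_000


# The reference track from this article: 320 kbps, 3 minutes 54 seconds.
print(f"{expected_size_mb(320, 3, 54):.2f} MB")  # prints "9.36 MB"
```

That lines up with the roughly 9 MB shown for the reference track.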

Now that we have our reference, we can start our testing.

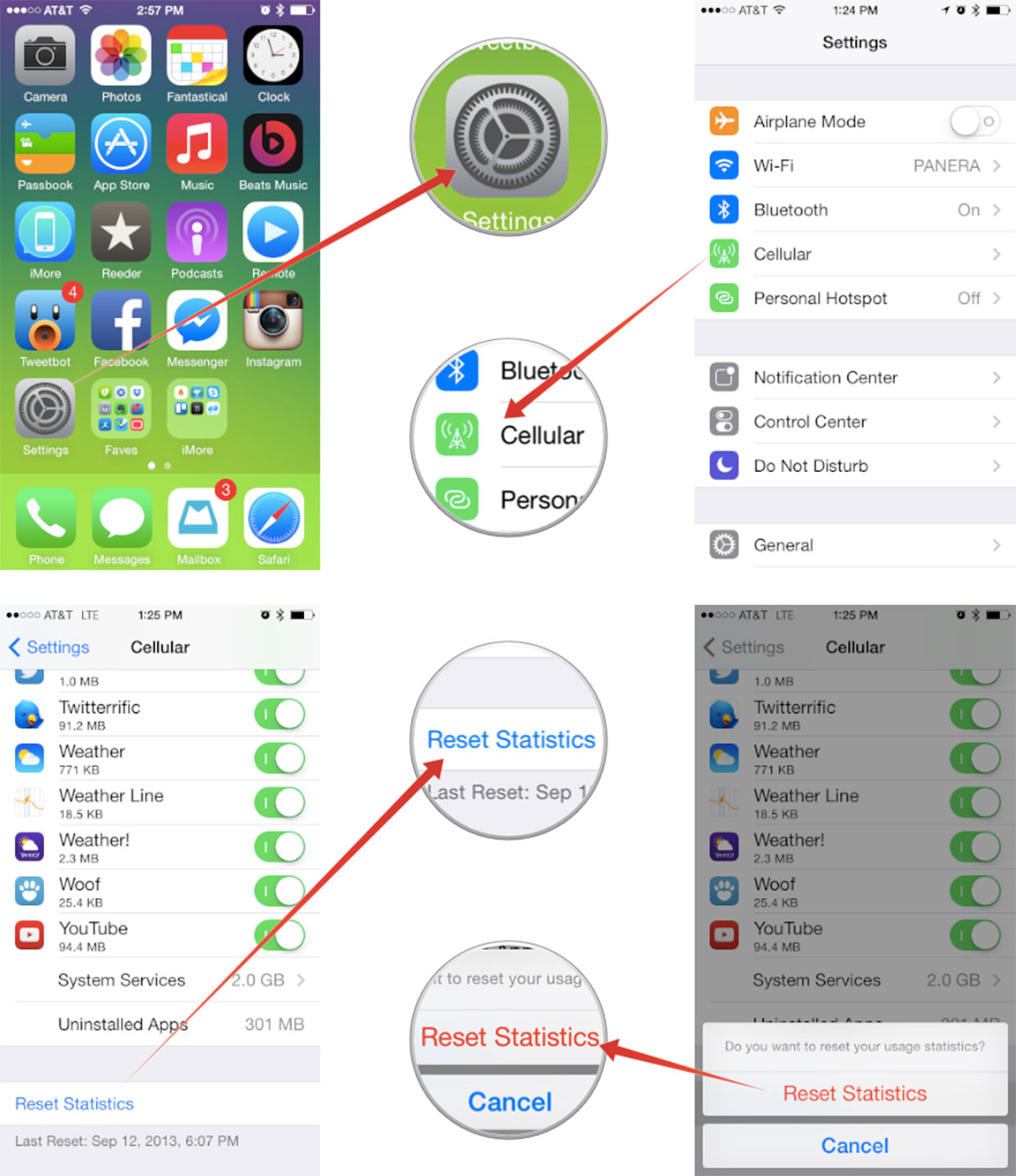

- To start, launch the Settings app on your iPhone or iPad while on cellular data.

- Now tap on Cellular.

- Scroll all the way to the bottom and tap on Reset Statistics.

- On the next screen, just verify you'd like to reset statistics.

- Your cellular data usage for the current period should now read 0 bytes.

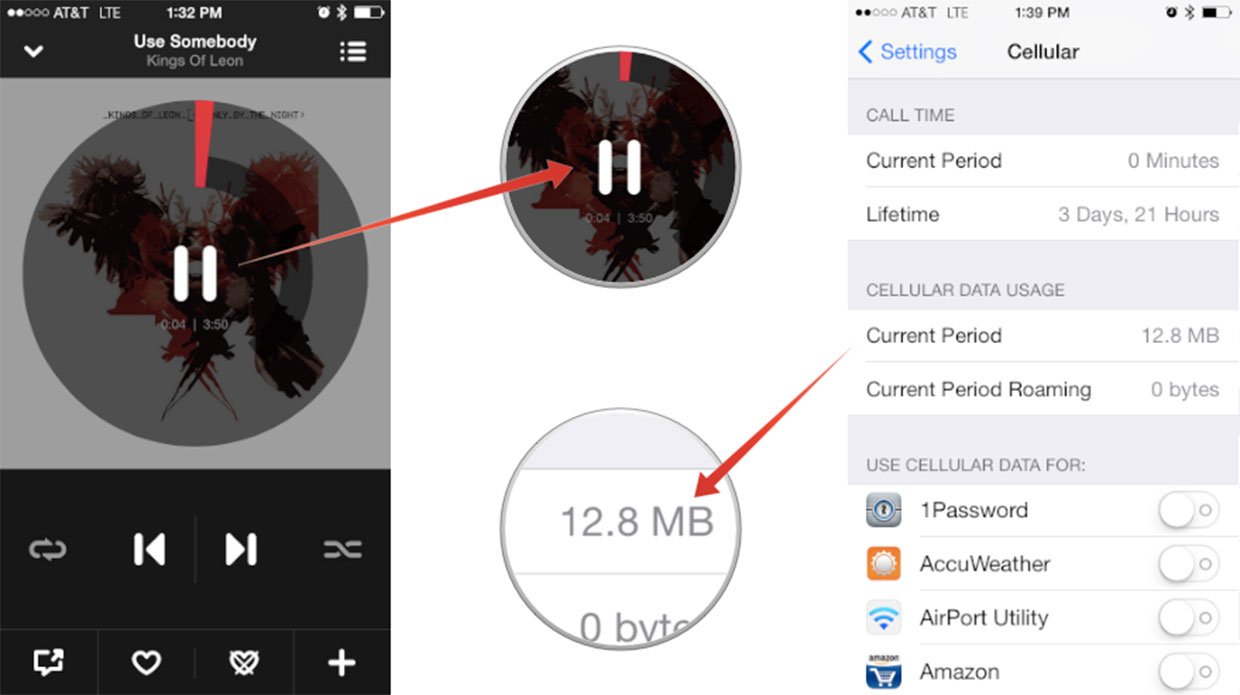

- Now launch your streaming music service and play the track over cellular that you chose as your reference song. Make sure you play it all the way through from beginning to end and stop it once it's over so it doesn't go on to another track.

- Now go back into your Settings.

- Tap on Cellular and view how many MB show up under Current Period. This is how many MB it took to stream that one reference track.

- Compare it with your reference point and you now have a very good idea of what the actual bit rate is for the streaming music service you tested.

As you can see in my example above, the stream used 12.8 MB. That's somewhat more than the 9 MB reference file, likely because the cellular counter includes everything the app transferred and not just the audio, but it's in the right range for an MP3 track that's around 4 minutes long if the service truly is streaming at around 320 kbps. Also, if your music streaming app has audio quality options, make sure you set them to the highest possible before testing. You can also switch between them to see the difference between what the service considers low and high quality if you'd like.
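To turn the number under Current Period into a rough bit rate, divide the data used by the length of the track. Here's a minimal sketch of that calculation. The function name is hypothetical, and it assumes 1 MB = 1,000,000 bytes and 1 kbps = 1,000 bits per second. Treat the result as an upper bound on the audio bit rate, since the counter also includes whatever else the app downloaded while the song played.

```python
def effective_kbps(measured_mb: float, minutes: int, seconds: int) -> float:
    """Average data rate in kbps for a stream that used `measured_mb` of data.

    Assumes 1 MB = 1,000,000 bytes and 1 kbps = 1,000 bits/s.
    The result covers ALL data the app used, not only the audio.
    """
    duration_s = minutes * 60 + seconds
    if duration_s <= 0:
        raise ValueError("track length must be greater than zero")
    total_bits = measured_mb * 1_000_000 * 8
    return total_bits / 1000 / duration_s


# Numbers from this article: 12.8 MB used, track length 3:54.
print(f"streamed: about {effective_kbps(12.8, 3, 54):.0f} kbps")
# The 9 MB reference file over the same length, for comparison.
print(f"reference: about {effective_kbps(9, 3, 54):.0f} kbps")
```

If the streamed figure comes out well below the advertised bit rate, the service probably isn't delivering what it claims over cellular. A result at or above it, like the one here, suggests it is.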


That's pretty much it! It may not be the most scientific way in the world but it definitely works and seems to give pretty accurate results. If you tried it, let us know in the comments what service you tested over what network and what your results were like!

iMore senior editor from 2011 to 2015.