Making the iPhone camera accessible for the blind

Someone who can't see may still want to share photos with family, friends, and connections who can. That's why offering a Camera app that's accessible to those with limited or no vision is so important. Everyone should have the option of taking pictures of the people and places that matter to them. And yes, in the age of social media, everyone should have the option of taking a selfie. That's the essence of inclusivity.

Embarrassingly, before it was pointed out to me a couple of weeks ago, it hadn't occurred to me that Apple had added VoiceOver, the company's screen reader technology, to the camera. I hadn't even considered that aspect of accessibility. But Apple had, and they'd taken steps to make it work.

Making the camera accessible

The first step in making the Camera app accessible is making Camera.app accessible. In other words, making sure everyone can get to it whenever they need it.

You can navigate to Camera using VoiceOver, but Apple's made it even easier. Simply tell Siri "Open the camera". Even "Siri, take a selfie" works. It doesn't automagically switch to the front-facing mode or take a picture, at least not yet, but it gets you where you want to be.

When VoiceOver is enabled, not only will it read out the buttons, controls, and options available to you, but it will tell you the settings and the orientation of the camera. That might sound redundant, because we know how we're holding it, but sometimes the interface doesn't switch when the phone switches and sideways pictures result. Having a voice confirmation is not just the equivalent of looking at the camera icon and making sure it's pointing up, it's a proactive protection. Same with high dynamic range and flash settings.

Camera. HDR. Automatic. Landscape.

VoiceOver will then use face detection to tell you how many people are in the frame. It will also say if a face is small, and where in the frame a face or faces are located, in case you want to try to center them better. When you move, it will tell you the new framing, so you can figure out if you're getting closer to the shot you want.

One face. Small face. Bottom left corner.

Making photos accessible

Taking a photo is only the first step. You also need to be able to do something with the photos you take. That can begin with "Siri, launch Photos". (With iOS 9, Siri will also be able to search photos: "Show me photos from September in San Francisco!")

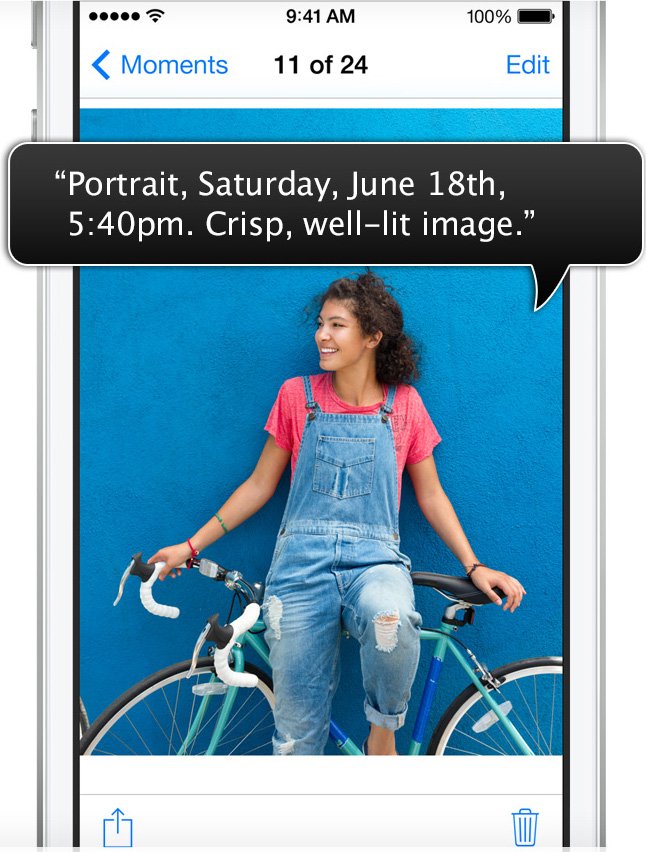

From there, in addition to the standard functionality, VoiceOver will tell you when a photo was taken. It will also tell you the orientation, and whether the image is crisp or blurry (focus) and bright or dim (exposure).

Photo. Landscape. August 2nd. 12:52pm. Crisp. Bright image.

You can go through the collection, moments, or albums views, tap away, and get a brief audio description of each and every photo. That way, before you share any images over Messages or Mail, or to Instagram or Facebook, you can get a better sense of which image it is and whether it might be good enough for you to send or post.

Making accessibility for everyone

Accessibility technology is important. Whether or not every implementation works for everyone all the time, that it's being given relentless consideration and attention is important. Not because it's something nice to do but because it's something that must be done.

It's something that can potentially benefit everyone. Everyone ages, everyone is at risk of allergic reaction, accident, infection, surgery, or sports-related injury. Everyone, directly or through a family member or friend, has the chance of experiencing the need for better visual accessibility. And everyone benefits from apps being made better because accessibility is considered.

Earlier this year Apple won the 2015 Helen Keller Achievement Award for VoiceOver. That wasn't a pat on the back for a job well done; it was a firm push forward to keep on doing it.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.