Apple publishes Child Safety FAQ to address CSAM scanning concerns and more

iMore offers spot-on advice and guidance from our team of experts, with decades of Apple device experience to lean on. Learn more with iMore!

What you need to know

- Apple unveiled controversial new Child Safety measures last week.

- The company has now posted an FAQ to tackle some questions and concerns about the policy.

- In particular, it seems keen to address the difference between CSAM scanning and a new communication safety feature in Messages.

Apple has published a new FAQ to tackle some of the questions and concerns raised regarding its new Child Safety measures announced last week.

The new FAQ accompanies a series of technical explanations of recently announced technology that can detect Child Sexual Abuse Material (CSAM) uploaded to iCloud Photos, as well as a new feature that uses machine learning to identify whether a child sends or receives sexually explicit images. In the FAQ, Apple appears particularly concerned with establishing the difference between these two new features, as the following excerpt shows:

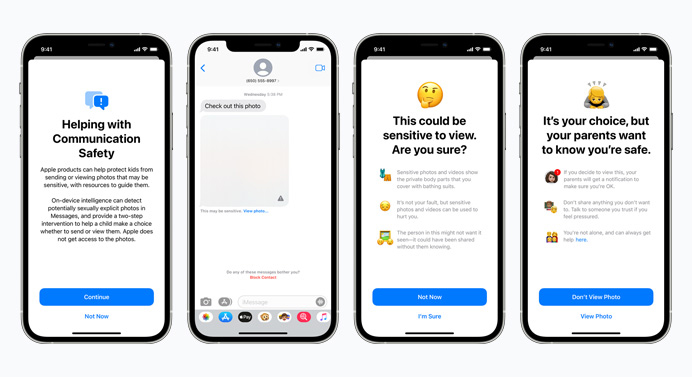

Communication safety in Messages is designed to give parents and children additional tools to help protect their children from sending and receiving sexually explicit images in the Messages app. It works only on images sent or received in the Messages app for child accounts set up in Family Sharing. It analyzes the images on-device, and so does not change the privacy assurances of Messages. When a child account sends or receives sexually explicit images, the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view or send the photo. As an additional precaution, young children can also be told that, to make sure they are safe, their parents will get a message if they do view it. The second feature, CSAM detection in iCloud Photos, is designed to keep CSAM off iCloud Photos without providing information to Apple about any photos other than those that match known CSAM images. CSAM images are illegal to possess in most countries, including the United States. This feature only impacts users who have chosen to use iCloud Photos to store their photos. It does not impact users who have not chosen to use iCloud Photos. There is no impact to any other on-device data. This feature does not apply to Messages.

The questions also cover whether information from Messages is shared with law enforcement, whether Apple is breaking end-to-end encryption, CSAM images, scanning photos, and more. The FAQ also addresses whether CSAM detection could be used to detect anything else (no), and whether Apple would add non-CSAM images to the technology at the behest of a government:

Apple will refuse any such demands. Apple's CSAM detection capability is built solely to detect known CSAM images stored in iCloud Photos that have been identified by experts at NCMEC and other child safety groups. We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future. Let us be clear, this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government's request to expand it. Furthermore, Apple conducts human review before making a report to NCMEC. In a case where the system flags photos that do not match known CSAM images, the account would not be disabled and no report would be filed to NCMEC.

Apple's plans have raised concerns in the security community and drawn criticism from public figures such as NSA whistleblower Edward Snowden.

Stephen Warwick has written about Apple for five years at iMore and previously elsewhere. He covers all of iMore's latest breaking news regarding all of Apple's products and services, both hardware and software. Stephen has interviewed industry experts in a range of fields including finance, litigation, security, and more. He also specializes in curating and reviewing audio hardware and has experience beyond journalism in sound engineering, production, and design.

Before becoming a writer Stephen studied Ancient History at University and also worked at Apple for more than two years. Stephen is also a host on the iMore show, a weekly podcast recorded live that discusses the latest in breaking Apple news, as well as featuring fun trivia about all things Apple. Follow him on Twitter @stephenwarwick9