Apple blasted over lack of child exploitation prevention days after scrapping controversial CSAM plan

New laws in Australia mean companies must share information about their methods...

A world-first report from Australia's eSafety Commissioner says that companies including Apple are not doing enough to tackle child sexual exploitation on platforms like iOS and iCloud, just days after Apple announced plans to scrap a controversial child sexual abuse material (CSAM) scanning tool.

Earlier this year, the commission sent legal notices to Apple, Meta (Facebook and Instagram), WhatsApp, Microsoft, Skype, Snap, and Omegle under new government powers, requiring them to answer detailed questions on how they are tackling child sexual exploitation on their platforms.

“This report shows us that some companies are making an effort to tackle the scourge of online child sexual exploitation material, while others are doing very little,” eSafety Commissioner Julie Inman Grant said in a statement Thursday.

Apple and Microsoft in the crosshairs

Today's release singled out Apple and Microsoft for not attempting "to proactively detect child abuse material stored in their widely used iCloud and OneDrive services, despite the wide availability of PhotoDNA detection technology." The report further highlights that Apple and Microsoft do "not use any technology to detect live-streaming of child sexual abuse in video chats on Skype, Microsoft Teams or FaceTime, despite the extensive use of Skype, in particular, for this long-standing and proliferating crime."

The report also unearthed wider problems "in how quickly companies respond to user reports of child sexual exploitation and abuse on their services." Snap responded to reports in an average of four minutes, while Microsoft took an average of 19 days. Apple doesn't even offer in-service reporting, and was criticized for making users "hunt for an email address on their websites – with no guarantees they will be responded to."

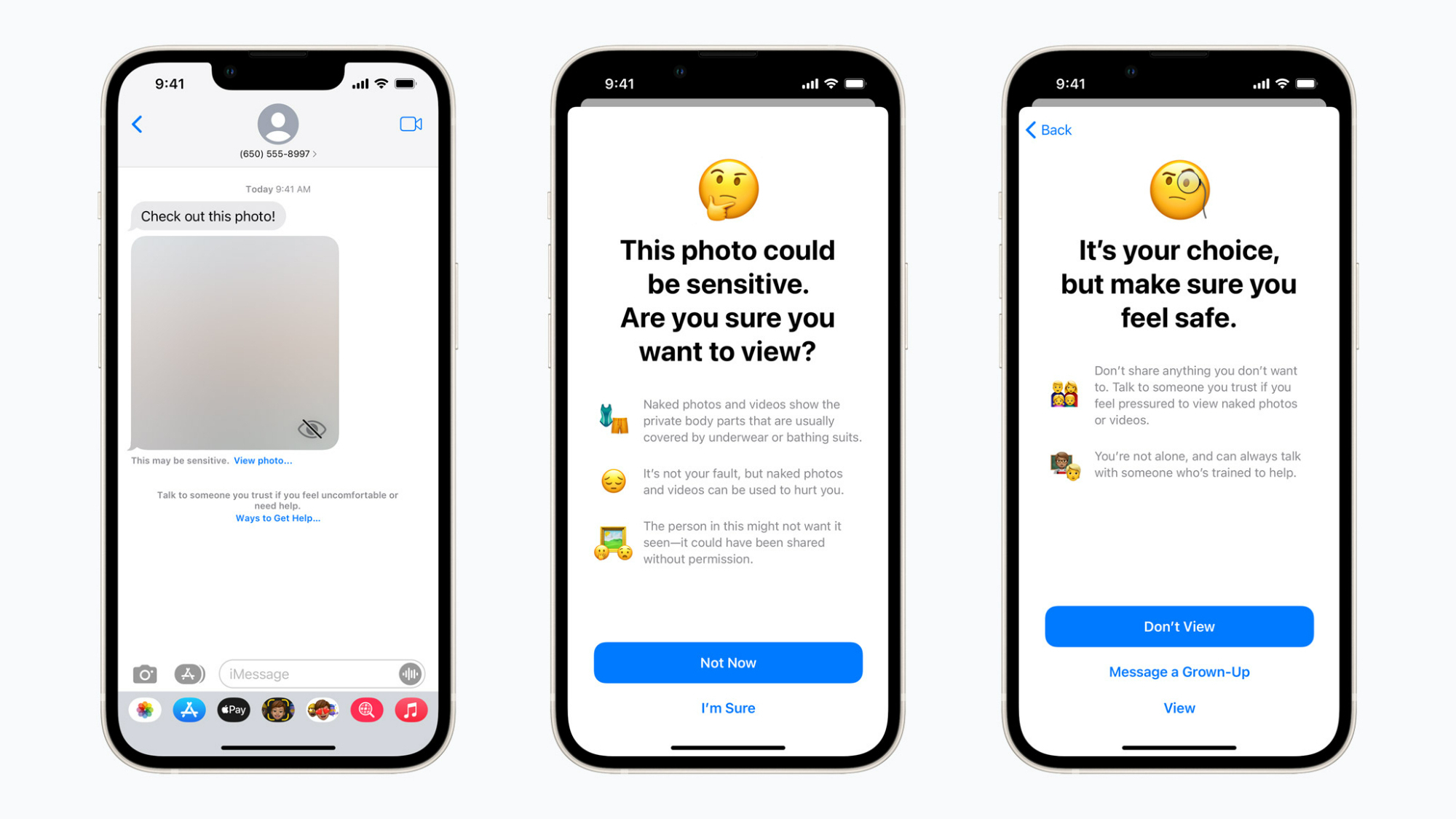

Apple tried to implement exactly this sort of CSAM scanning last year, but its controversial plan to check the hashes of images uploaded to iCloud against a database of known CSAM was met with wide backlash from security experts and privacy commentators, forcing Apple to delay the plans. Apple does use image hash matching in iCloud email through PhotoDNA. The company confirmed on December 7 that it had scrapped plans to move forward with its CSAM detection tool. In a statement at the time, Apple said "children can be protected without companies combing through personal data, and we will continue working with governments, child advocates, and other companies to help protect young people, preserve their right to privacy, and make the internet a safer place for children and for us all."

In a statement included in the report, Apple said: "While we do not comment on future plans, Apple continues to invest in technologies that protect children from CSEA (child sexual exploitation and abuse)."

Stephen Warwick has written about Apple for five years at iMore and previously elsewhere. He covers all of iMore's latest breaking news regarding all of Apple's products and services, both hardware and software. Stephen has interviewed industry experts in a range of fields including finance, litigation, security, and more. He also specializes in curating and reviewing audio hardware and has experience beyond journalism in sound engineering, production, and design.

Before becoming a writer, Stephen studied Ancient History at university and also worked at Apple for more than two years. Stephen is also a host on the iMore show, a weekly podcast recorded live that discusses the latest in breaking Apple news and features fun trivia about all things Apple. Follow him on Twitter @stephenwarwick9