How Google is copying Apple's Face ID for the Pixel 4

iMore offers spot-on advice and guidance from our team of experts, with decades of Apple device experience to lean on. Learn more with iMore!

I've gone over the origin of "great artists steal" before. You know, the line the tech industry often credits to Steve Jobs who credited it to Pablo Picasso. TL;DR it comes from poets who wanted to extol the virtue not of lazily reproducing what came before but of drawing inspiration from what is to take the next step forward and create what will be. In other words, don't just copy. Copy and improve.

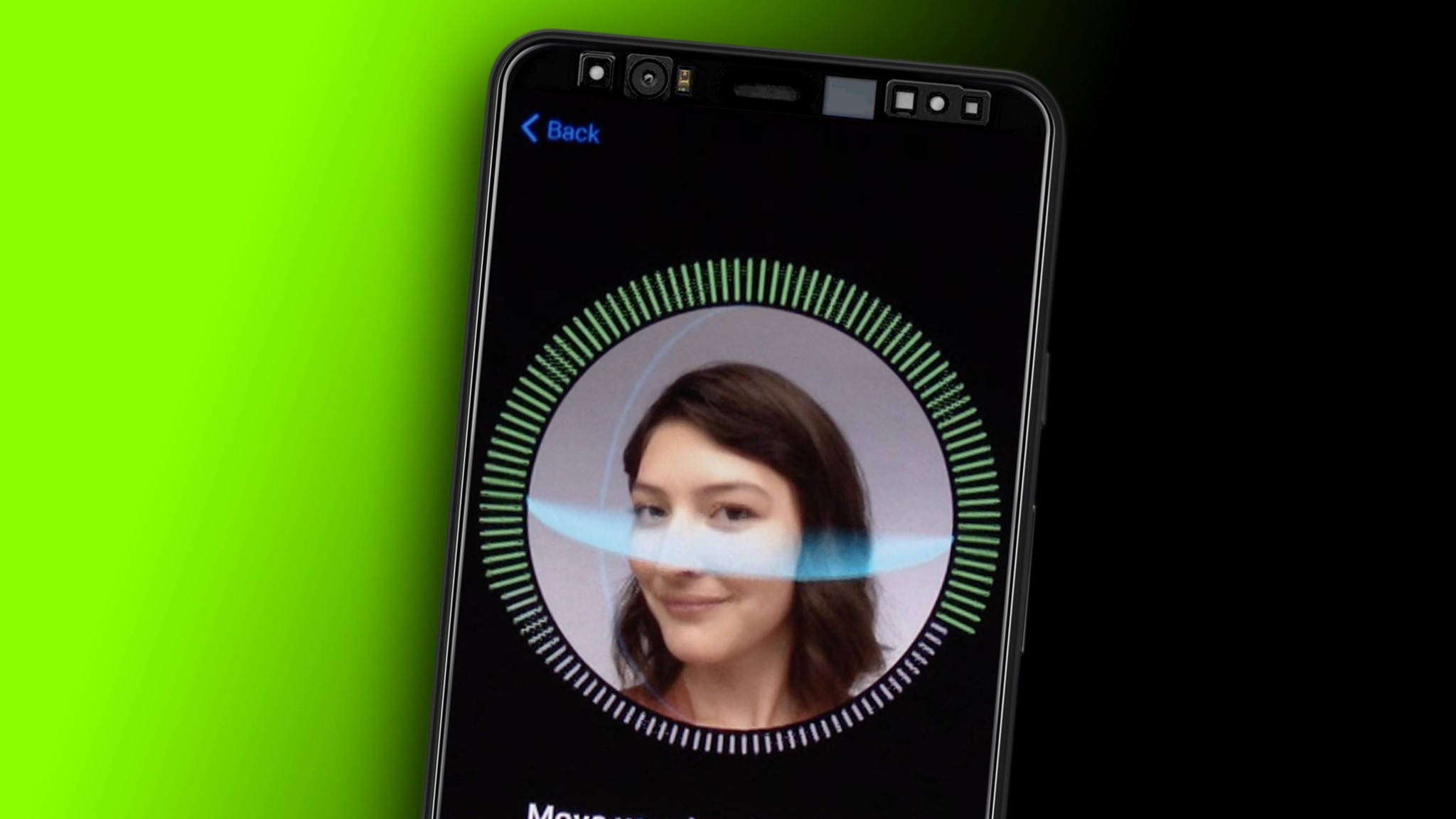

So, while some people got all super finger-pointy and "Mountain View, start your photocopiers" when Google basically announced that a duplicate of Face ID will be coming to the Pixel 4 this late fall, I got kinda hella interested, and for that very reason.

And yes, Google is already actively previewing not just renders of the new, very iPhone 11 looking camera system, but the very Face ID looking biometric facial geometry sensor. Don't get me started.

Well, since there seems to be some interest, here you go! Wait 'til you see what it can do. #Pixel4 pic.twitter.com/RnpTNZXEI1 (Made By Google, @madebygoogle, June 12, 2019)

The post post meta meta world

We've had companies basically unbox and review their own products before, so why not leak them now too, right? I mean, we're in the post-post meta media age where for the lulz is now codified in marketing manuals, and there are few if any norms left, so why not just have fun with it all?

More seriously, though, by Google's own admission, last fall's Pixel 3 flagship isn't selling in any significant quantities, so they likely fear no Osborne effect, and that grants them a certain zero-F's-left-to-lose freedom to tease the Pixel 4 in a way certainly Apple and probably Samsung and other big volume phone sellers wouldn't have ever even considered doing, at least not until this. If it works.

Google's Face Unlock

Google calls it Face unlock but, based on their blog post, the system seems architected almost identically to Face ID.

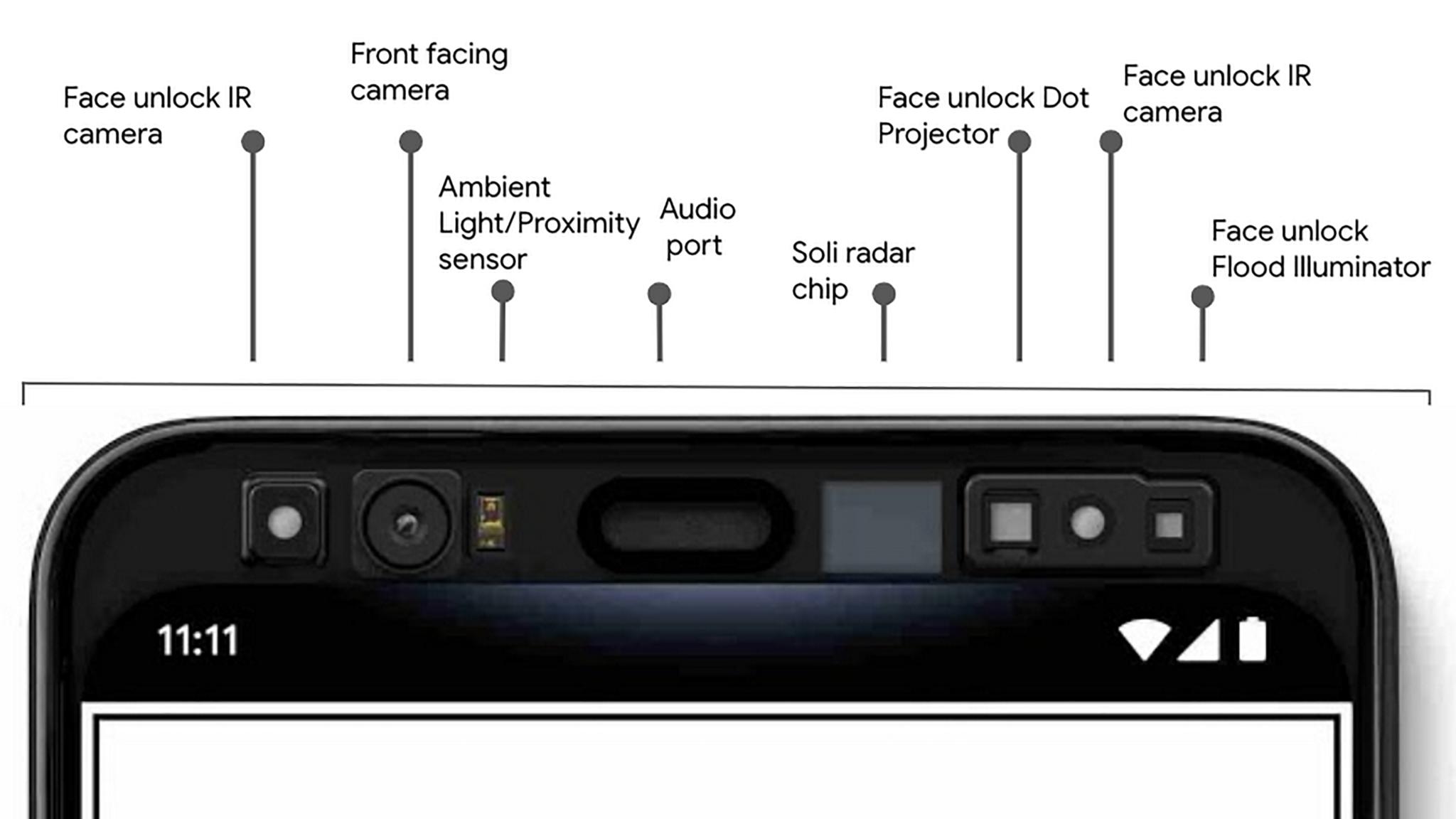

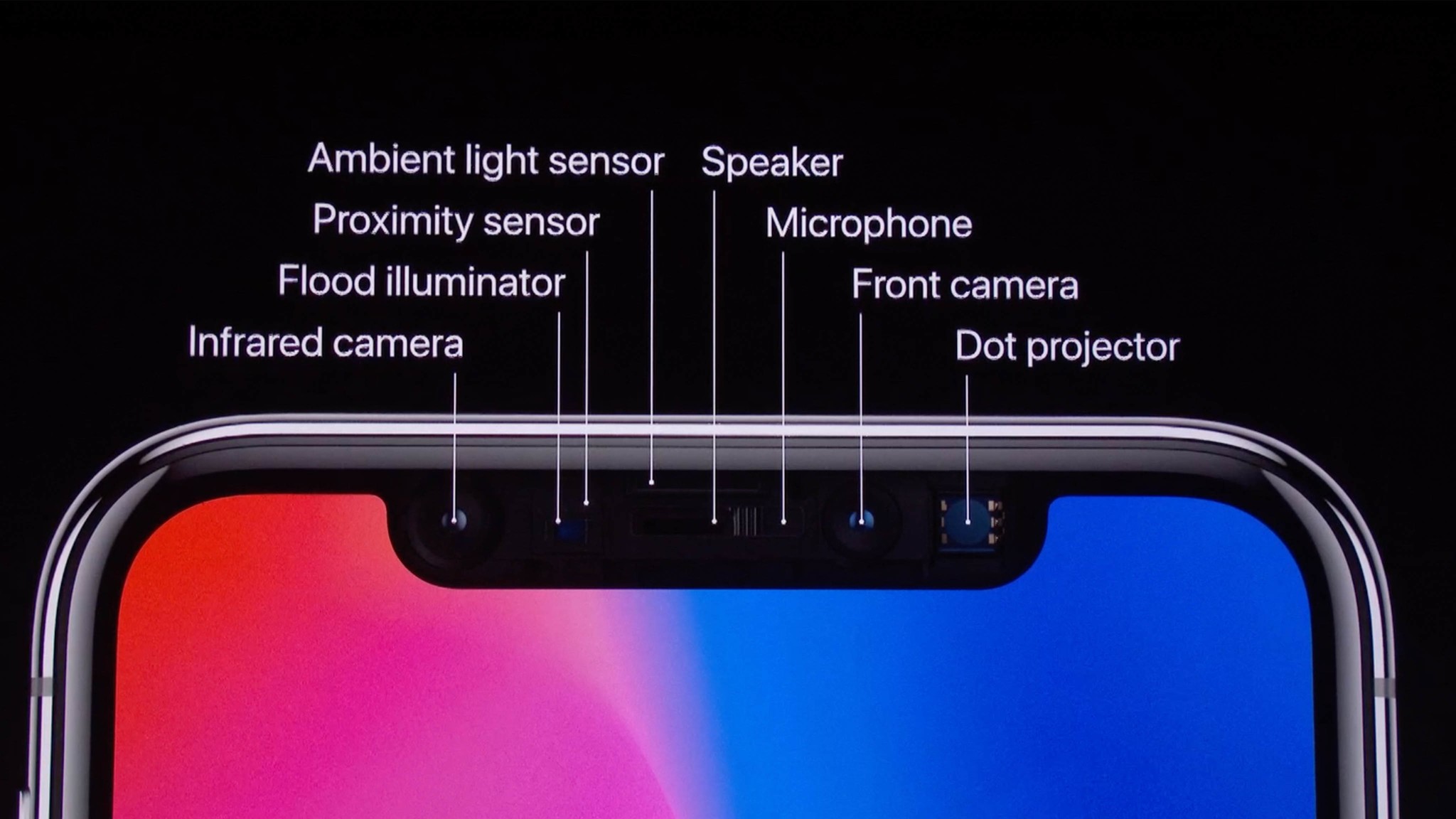

It has a flood illuminator and dot projector to both infrared light up and high contrast mark up your facial geometry, just like Face ID. But where Face ID has a single IR camera to read in that data, Google's Face unlock is going to have two, one on each side of the full-on top bezel forehead this expanded system is going to require to house it.

Which, by the way, I have absolutely no problem with. Notch, hole punch, forehead, mechanical choochers what raise and lower selfie cams — they're all stopgaps to me until we can get all those sensors under the display, and that's still some time away. Early attempts at it are doing way less and not doing it anywhere near well yet.

But, if you have a strong preference twixt one or tother, as Captain Malcolm Reynolds would say, let me know in the comments.

The facial geometry then gets converted to math and passed off to Google's Titan security chip for secure authentication.

Here's where things get fuzzy, though. Apple has something similar to Titan, a secure block integrated into their custom A-series processors, to handle storage of the data. But, Apple also has an integrated neural engine on A-series to both handle the matching and combat spoofing attempts.

Google's almost certainly going to lean on Qualcomm's silicon to do something similar, and they can even rope in the GPU if they have to. They just haven't outlined the process yet, and I'm super curious to see what, if anything, they may be doing differently.

If you already know or have any good guesses, hit up those comments and share.

So, why two IR cameras?

Jerry Hildenbrand of Android Central speculates that it might be because the camera tech isn't yet as good as Apple's, so two systems are being used to compensate, that Google is doing a stereo instead of a mono reading of the face data, or that it might let the face unlock work at 180 degrees or off-axis, like when the phone is sitting on the table.
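On the stereo idea specifically, here's a minimal sketch of why a second camera could help, assuming the textbook pinhole stereo model rather than anything Google has actually described. Two cameras a known distance apart see the same point at slightly different horizontal positions, and that shift (the disparity) gives you depth. The function name and all the numbers below are hypothetical, not Pixel 4 specs.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Textbook pinhole stereo relation: depth = focal length * baseline / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical values chosen only for illustration.
    focal_length_px = 600.0   # assumed focal length, in pixels
    baseline_m = 0.05         # assumed spacing between the two IR cameras, in meters

    # A larger disparity means the point is closer to the cameras.
    for disparity_px in (120.0, 100.0, 60.0):
        depth_m = depth_from_disparity(focal_length_px, baseline_m, disparity_px)
        print(f"disparity {disparity_px:5.1f} px -> depth {depth_m:.2f} m")
```

A single IR camera can still get depth from the distortion of the projected dots, so this shows what a second camera could add, not what it's required for.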

The 2018 iPad Pro can already do 180, hell 360 degrees off the one IR camera, though, so while Google might need two cameras for that, it's clear two cameras aren't absolutely required for it.

Also, there's no point reading off-axis if you can't illuminate and dot-project off it, so we'll have to wait and see about that part.

My completely uneducated guess is that it'll let you unlock at slightly wider angles while you're holding the phone, so it can start sooner and handle a greater range of positions than a single IR camera would allow. If not something as extreme as sitting on a table.

But, again, if you know better, comment, comment, comment.

Here's what Google had to say about it in their blog post:

"Other phones require you to lift the device all the way up, pose in a certain way, wait for it to unlock, and then swipe to get to the homescreen."

"Other phones" here means the iPhone, which is weird to be cutesy about given how brazenly Google names it in their photography compares every year.

It reads weird in general, though. You also don't have to pose with Face ID, just look at it. And it doesn't require you to wait or to swipe. The unlock is really fast and the swipe is a conscious design choice.

Part of the attention system is that it allows you to do things like unfurling private messages at a glance, and if it just unlocked and went directly to the home screen, it would blow through those notifications before you had time to see them.

As a user, figuring out how to unlock without blowing past your notifications, if that's all you wanted to see, would then become a hugely awkward and annoying task. And it would totally negate the point of lock screen data in general, at least for the actual owner of the phone.

For people who don't want or care about lock screen data or notifications, though, I'm still a firm believer that Apple should add a toggle in settings for "Require Swipe to Unlock" so they can blast right through the lock screen and go straight to the home screen, if that's what they want to do.

If you want that too, do me a favor and let Apple know in the comments.

But, otherwise, to make it sound like a limitation of the technology is just disingenuous.

Project Soli

"Pixel 4 does all of that in a much more streamlined way. As you reach for Pixel 4, Soli proactively turns on the face unlock sensors, recognizing that you may want to unlock your phone."

Soli is hella cool. It's pretty much radar in a tiny chip, using radio waves to detect and track movement — range, angle, and velocity in 3D space. It's like, I don't know, Daredevil in a chip.
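To make the "range and velocity" part a little more concrete, here's a rough sketch of the two standard radar relations: distance from the round-trip time of a reflected signal, and radial speed from the Doppler shift. This is general radar math, not Soli's actual signal processing, which Google hasn't detailed here. The 60 GHz carrier and the function names are assumptions for illustration.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
ASSUMED_CARRIER_HZ = 60e9  # assumption: a 60 GHz carrier, used only for this example


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target: the signal travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def radial_velocity_from_doppler(doppler_shift_hz: float,
                                 carrier_hz: float = ASSUMED_CARRIER_HZ) -> float:
    """Speed along the line of sight; a positive shift means the target is approaching."""
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Hypothetical measurements: a hand roughly 30 cm away, moving toward the phone.
    print(f"range: {range_from_round_trip(2e-9):.3f} m")
    print(f"radial velocity: {radial_velocity_from_doppler(400.0):.3f} m/s")
```

Angle would need more than one receive antenna, which this sketch leaves out.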

"If the face unlock sensors and algorithms recognize you, the phone will open as you pick it up, all in one motion."

So, what problem are they actually trying to solve here?

Face ID already works fine as you pick up the iPhone, all in one motion and everything. Because phones have had motion sensors like accelerometers in them for well over a decade.

The problem accelerometers run into is, yeah, you guessed it, when there's no acceleration. So, for example, if your phone is on a dock or mount or shelf or you're holding it up in front of you but you waited and let it go back to sleep for some reason.

Then you have to either pick it up, tap the display to wake it, or shake, shake, shake it back awake.
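Here's a minimal sketch of that limitation, assuming a deliberately simplified wake rule: wake only when the acceleration reading changes by more than some threshold between consecutive samples. Real raise-to-wake logic is more involved, and the function name, threshold, and sample values here are all hypothetical.

```python
import math
from typing import Sequence, Tuple

Sample = Tuple[float, float, float]  # (x, y, z) acceleration in m/s^2


def should_wake(samples: Sequence[Sample], threshold: float = 1.5) -> bool:
    """Return True if any sample-to-sample change in acceleration exceeds the threshold."""
    for previous, current in zip(samples, samples[1:]):
        if math.dist(previous, current) > threshold:
            return True
    return False


if __name__ == "__main__":
    # Phone sitting in a dock: only gravity, reading never changes.
    docked = [(0.0, 0.0, 9.81)] * 5
    # Phone being picked up: readings swing as it moves and tilts.
    picked_up = [(0.0, 0.0, 9.81), (0.5, 2.0, 9.0), (1.0, 4.5, 7.5)]

    print("docked wakes:", should_wake(docked))
    print("picked up wakes:", should_wake(picked_up))
```

The docked phone never crosses the threshold no matter how long you stare at it, which is the gap a radar that senses your reach could, in principle, fill.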

That's a super-specific niche, but if Soli could make it better, that'd be great. But Google specifically says Soli only triggers as you reach for your phone and, most of the time, I mean product videos aside, you're going to want to be holding your phone anyway.

So, my guess is, Soli is just using all the radar sense to warm up the camera system as you reach for it, as opposed to when you start lifting it. At worst, when your face isn't in the field of view before you start lifting, it'll make no difference. At best, when your face gets into the field of view before you even pick it up, it'll be like Touch ID 2 over Touch ID 1, just that much faster that you can feel the difference.

Ironically, I'd probably want the notification unfurling and lock screen info faster, rather than the Home screen, because my eyes would be able to glance at and parse the visual data faster than my hands would be able to start tapping away.

But I won't know for sure until I actually use it. Yell at me in the comments if you think I'm missing anything wicked obvious there.

"Better yet, face unlock works in almost any orientation, even if you're holding it upside down..."

Again, much like last year's iPad Pro.

"...and you can use it for secure payments and app authentication too."

Much like 2017's iPhone X.

I don't mean to snark there. But Apple shipped the original Face ID almost two years ago, and it'll be pretty much exactly two years by the time the Pixel 4 ships, and a year after the omnidirectional iPad version.

So the whole thing is a bit of a weird flex on Google's part, given I'm sure some of us were hoping they'd be even further ahead by now and, instead of highlighting older Apple hardware and process features, they'd be talking up sizzling cool new Google machine learning algorithms that would, who knows, let us smirk or blink or twitch our noses to unlock into specific apps or something.

Or, even better, rather than using more sensors than Apple, require fewer. Sort of how they managed really good portrait mode with even fewer cameras. You know, to get Face ID without a notch, much less a forehead. Now that would be a flex worth, you know, flexing.

Google also pre-announced another feature for Soli — air gestures.

Which… I'm kinda super cautiously neutral-mistic about.

Basically, they let you hold out your fingers and hands and cast spells on your phone. Not Magic Missile or Avada Kedavra or anything like that. More like Imperius on the operating system.

We've seen far less refined versions before from other companies.

If you're not familiar with how capacitive touch screens work, their sensing fields actually radiate out in three dimensions and can map things like fingers and hands in 3D space.

Apple has used that since forever to figure out which fingers were being used for stuff like multi-finger gestures and palm or spurious input rejection.

Samsung, though, has played around with it for air gestures in the past.

The LG G8 shipped with a bunch of, I'm just going to come out and say it, pretty damn goofy air gestures this year as well.

So far it's been limited to things like, if your phone is in a dock, and your hands are covered in gravy or frosting, or you're just out of reach, you can swipe in the air to keep reading your recipe or change the volume or skip tracks or whatever.

Soli is far more fine-grained than that, and can detect things like you Mr. Miming to rotate the crown on a watch or type keys or strum notes on an instrument.

I'm super psyched about this technology, but currently the opposite way from how Google's using it: pointing out, not in.

One day, when I'm wearing augmented reality glasses or lenses or implants, I'm going to want to navigate the AR and VR world entirely through highly refined, real-world emulating air gestures.

But stuff like this is what we need to get to stuff like that. So, at best this initial implementation is an instant revolution like multitouch and at worst just a gimmick for now, but either way we get to the future faster.

What comes next

It's going to be silly stupid fun watching people who hated the idea of Face ID on the iPhone suddenly fall in love with it on the Pixel, but so was watching them call the iPhone design boring but not the almost identical Pixel 1 design, Portrait Mode a gimmick until the Pixel 2 adopted it, and the notch on iPhone X super ugly until the Pixel 3 said hold my notch beer.

But it's just as much silly stupid fun watching Apple quote-unquote invent features Android phones have been using for a year or two as well, and the vice being totally versa'd by people who hated Google or Samsung or Huawei or whomever for it but suddenly fall notch over Lightning port in love with it on iPhone too.

I just want the future faster, so I want all of this stuff. But I also want to analyze the hell out of it with all of you, because that's where we live.

So hit like if you do, subscribe if you haven't already, and then hit up the comments and let me know what you think about Google's Pixel teaser. Shameless Face ID facsimile or next-generation sensor tech?

Thank you so much for watching and see you next video.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.