iPhone XX — Imagining the next 10 years of Apple

When Steve Jobs announced the original iPhone in 2007, the first big leap forward, it was made possible by the confluence of several rapidly emerging technologies — capacitive multitouch, ubiquitous mobile data networks, and miniaturized computing.

All of these technologies existed prior to the iPhone, but they'd never been brought together in anything as polished or approachable as the iPhone.

Evolution followed revolution, and over the course of the last decade, we got high-density displays, Siri, LTE and Lightning, custom 64-bit silicon and Touch ID, extensibility and continuity, Apple Watch and AirPods, computational photography and audio, inductive charging and Shortcuts.

When Tim Cook announced the iPhone X in 2017, the second big leap forward, it packaged together new technologies like flexible OLED displays, depth-sensing TrueDepth cameras for Face ID, and the Neural Engine. But it also jettisoned the fundamental interactive element of the previous decade, the Home button, and replaced it with a new, more direct gesture navigation system.

Extreme dynamic range, spatial audio, Wi-Fi 6, and ultra-wideband followed, but looking back is never as exhilarating as looking forward. And that's what I really want to talk about today. Not what was, but what will be. Not this year, but the next 10 years to come.

Sure, we have iPhone 12 coming up, and 5G, but beyond that, we have an even greater confluence of rapidly emerging technologies that, taken together, could make for an even bigger third leap forward. With iPhone XX.

No… seriously.

Wearables

At the beginning of the last decade, Steve Jobs took to the keynote stage one final time to announce iCloud and, with it, the end of the iTunes PC tether. It meant nothing less than freedom for the iPhone and iPad, the ability to own and use one even if you didn't have a PC. It took until iOS 5, but it happened.

In this decade, I think we'll see the same thing happen with the Apple Watch. Not the death of another physical tether but of a wireless one. The end of dependence on the iPhone and beginning of Apple Watch independence — the ability to own and use one even if you don't have an iPhone.

But more than that: the watch won't be able to do as much as the phone, just like the phone can't do as much as the PC, but it'll be able to do more and more without us having to reach for the phone or retreat to the PC.

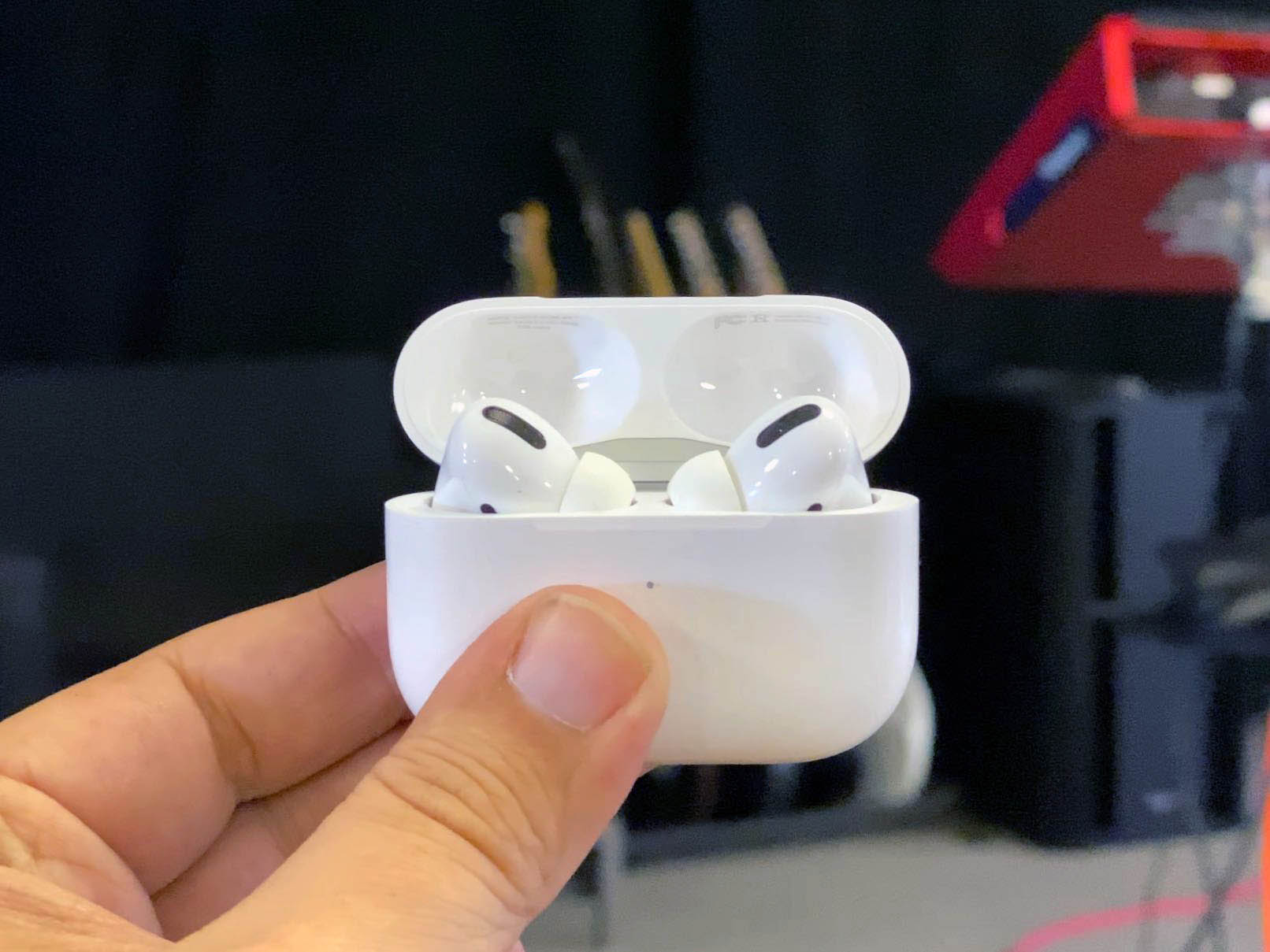

And, as the phone does more and more of what the PC used to do and the watch does more and more of what the phone used to do, so will the AirPods and similar earphones start doing more and more of what the watch used to do.

I've done a video explaining all this before, link in the description, but they'll eventually become independent as well, able to stream music, podcasts, and turn-by-turn directions not only without being connected to a phone or watch, but without you having to own a phone or watch at all if you don't want to.

Foldables

I've said numerous times on this channel that the history of human technology is the history of foldables. Books, pamphlets, wallets, clothes, calzones, tacos… even laptops. We do it because doubling depth in exchange for halving width or height has been a fair deal. A good one, even, when the outside can better protect what's inside.

Displays are no different. Phones and tablets are getting bigger and bigger. Just over the last decade we went from 3.5-inch to 6.5-inch iPhones, and from 9.7-inch iPads all the way up to 12.9. And they just don't fit in some people's clutches anymore. Or front pockets. Or, hell, even skinny hipster back or shirt pockets.

Yes, the foldables we're getting now aren't even beta, they're alpha. Never mind graduating, the technology is still in nursery school. And yes, I've also done a video on this, also linked in the description.

But time has a way of solving for terrible. And when it starts solving for foldables, I expect we'll have screens the same way we have everything else — like wallets, like books — able to fold up and fit pretty much everywhere we want them to while we're out doing pretty much everything we want to do without having to worry about them.

Projectables

While wearables and foldables got all the -ables attention over the last many and last few years, respectively, projectables have begun coming into their own as well. Just as websites were broken down into web services that could be surfaced not only in browsers but in apps and other services, like the Twitter API, OAuth, YouTube streams, and an endless list of others, so too have app interfaces been decoupled from logic, and binary blobs been broken down into far more portable functions.

It basically lets your stuff exist in… other stuff. WatchKit was an early, if ultimately flawed, example. CarPlay and Android Auto, AirPlay and Chromecast are far better ones. Continuity, AirDrop, Universal Clipboard, Handoff, all of it.

The idea that not just our data, but its state and even the functionality that goes with it, is no longer bound to a single device but to an identity, with all sorts of security baked in, of course, is what starts to make a lot of what comes next so very exciting.

Ambients

Siri was one of the last things Steve Jobs worked on. It was announced just a day before he passed away. Over the last decade we've seen the rise of Amazon's Alexa and Google Assistant as well, Microsoft's Cortana, Samsung's Bixby, and a range of other, more niche virtual assistants.

None of them are terrific yet. None of them are HAL, or KITT from Knight Rider, or even Samantha from Her, much less JARVIS or FRIDAY or anything else dreamed up in sci-fi.

They're only just now groping their way out of the primordial ooze and towards true natural language voice interfaces. But they're groping.

And when everything starts to click, when they start to really work, it'll legitimately be the beginning of not just a voice-first input revolution, but an ambient computing one.

Screens will still be super useful for reading things non-linearly, so we don't have to wait to hear them read aloud, but everything else will be as fast as we can think and say it.

And, I've done videos on this before, but say goodbye to debates about Touch ID vs. Face ID.

Because the phones in our pockets, watches on our wrists, pods in our ears, glasses in front of our eyes, will just constantly grab glimpses of our facial geometry, snippets of our voices, touches of our fingers, rhythms of our pulses, steps from our gait, and other biometric and behavioral facets and just know we're… us… and be unlocked and ready for action for as long as they're confident we remain us.

As 5G becomes ubiquitous and gives way to 6G, and maybe even 7G before the turn of the next decade, it'll all just be persistently connected as well. No more bars, just almost all the places, almost all the time.

iPhone XX (2027)

When all these technologies come together it's possible to imagine something for the next big thing in iPhone that… goes well beyond the current conception of the iPhone.

Instead of a slab of glass and steel you have… a marble or a fob. Maybe you wear it as a watch or maybe you just keep it in your pocket. And what it does is three simple things:

- Local authentication, so that, by constantly establishing a biometric and behavioral threshold of trust, it can reasonably prove we are us, so…

- Cloud connection lets it access all of our data, at its current state, and within its current context, so…

- It can project it onto any display, opportunistically, and conform to whatever interface paradigm is appropriate.

That means, if you have AirPods in your ears, you can access and affect anything you have in the cloud by just saying what you want, including complex operations by just talking out the equivalent of workflows.

If you're wearing augmented reality glasses, you get the visual interface as well, for when showing is still much more efficient than telling.

And if you need more or different, you get it. A piece of glass and metal in your pocket or pack, small or large, folded or flat, becomes your computing environment. Your stuff just gets projected onto it so you can use it like you do a phone or tablet or laptop today. An evolution of the Taptic Engine will let you feel like you're using any type of keyboard or knob or button or control surface you need.

Same with much bigger pieces of glass and metal on desks, yours and at co-working spaces or remote offices or hotels or conferences, and bigger ones still hanging or projected onto walls, pretty much anywhere.

But when you don't need it, it's just you. Maybe your glasses or just your pods. Pretty much the closest step we can get to external cybernetics… until they become internal.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.