Priti Patel says Apple should see through CSAM photo scanning measures

iMore offers spot-on advice and guidance from our team of experts, with decades of Apple device experience to lean on. Learn more with iMore!


What you need to know

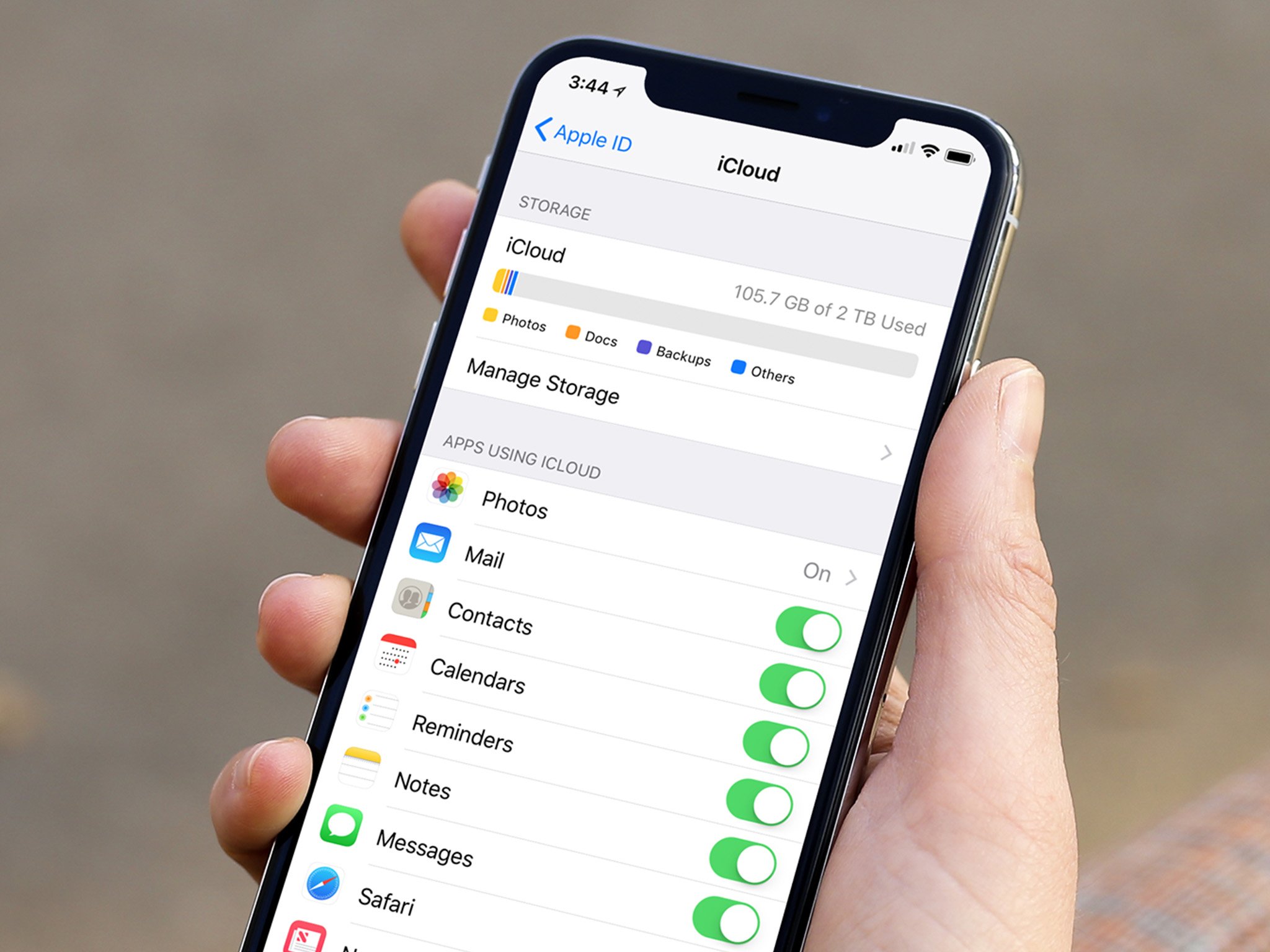

- Apple recently pumped the brakes on its Child Safety Measures.

- UK Home Secretary Priti Patel has called on the company to see out the project.

- It comes as the UK launches a new Safety Tech Challenge Fund.

UK Home Secretary Priti Patel has called on Apple to see through its Child Safety project, including a measure that would scan iCloud content for known Child Sexual Abuse Material.

It comes as the Home Office launches a new Safety Tech Challenge Fund. Patel stated:

> It is utterly appalling to know that the sexual abuse of children is incited, organised, and celebrated online. Child abusers share photos and videos of their abhorrent crimes, as well as luring children they find online into sending indecent images of themselves. It is devastating for those it hurts and happens on a vast and growing scale. Last year, global technology companies identified and reported 21 million instances of child sexual abuse.

The Home Secretary said it is a misconception that abuse like this takes place only in the "dark corners of the web", and argued that end-to-end encryption presents a major challenge to public safety:

> End-to-end encrypted messaging presents a big challenge to public safety, and this is not just a matter for governments and law enforcement. Social media companies need to understand they share responsibility for keeping people safe. They cannot be passive or indifferent about what their products enable or how they might inadvertently blind themselves and law enforcement from protecting children with end-to-end encryption.

Patel also praised Apple's recent CSAM measures, which the company has put on hold following feedback from experts and privacy advocates. She called on the company "to see through that project", stating that the technology's claimed one-in-a-trillion false positive rate meant "the privacy of legitimate users is protected whilst those building huge collections of extreme child sexual abuse material are caught out."

Apple's CSAM scanning measures have drawn criticism because the scanning takes place on-device, which some people consider intrusive. As noted, Apple has now put the plans on hold.


Stephen Warwick has written about Apple for five years at iMore and previously elsewhere. He covers all of iMore's latest breaking news regarding all of Apple's products and services, both hardware and software. Stephen has interviewed industry experts in a range of fields including finance, litigation, security, and more. He also specializes in curating and reviewing audio hardware and has experience beyond journalism in sound engineering, production, and design.

Before becoming a writer, Stephen studied Ancient History at university and also worked at Apple for more than two years. Stephen is also a host on the iMore show, a weekly podcast recorded live that discusses the latest in breaking Apple news, as well as featuring fun trivia about all things Apple. Follow him on Twitter @stephenwarwick9