The contextual awakening: How sensors are making mobile truly brilliant

iMore offers spot-on advice and guidance from our team of experts, with decades of Apple device experience to lean on. Learn more with iMore!

When Steve Jobs introduced the original iPhone in 2007 he spent some time talking about its sensors: the capacitive multitouch sensors in the screen that let you use your bioelectric finger as the best pointing device ever, the accelerometer that enabled the interface to rotate with the phone, the ambient light sensor that adjusted brightness to fit the environment, and the proximity sensor that turned off the screen and capacitance to save power and avoid accidental touch events when the phone was held up to a face. Over the course of the next year, Jobs also introduced Wi-Fi mapping and then GPS so the iPhone could chart its location, and later still, a magnetometer and gyroscope so it could understand direction, angle, and rotation around gravity. From the very beginning, the iPhone was aware.

The awareness was bi-directional as well. As the iPhone connected to Wi-Fi networks, so could it be used to map more networks. As the iPhone bounced off cell-towers and satellites to learn its location, so could its location be learned and information derived from it, like traffic conditions. As devices got smarter, so too did the networks connecting them. It was one, and it was many.

Microphones had been part of mobile phones since their inception, transmitting and recording the sounds of the world around them. They improved with noise cancellation and beam-forming, but they came alive with Voice Control and Siri. Originally an App Store app and service, Siri was bought by Apple and integrated into the iPhone in 2011, and it made the microphone smart. All of a sudden, the iPhone could not only listen, but understand. Based on something previously said, it could infer the context and carry that through the conversation. Rather than simply listen, it could react.

Google Now lacked Siri's charm but was also far more brash about its scope. Hooked into the calendar and web browser, email and location, and a bevy of internet information sources, it wouldn't wait for a request, it would push data when time or conditions made it relevant. Thanks to context and natural language coprocessors, it could listen constantly for queries and parse them locally for better speed and better battery life. For Apple, processing so much of our data on their servers and "always listening" to what we say no doubt set off numerous privacy alarms, but for Google and those willing to make that deal, it enabled an entirely new level of functionality. The phone could now be there, waiting not only for a touch, but for a word.

Apple introduced its own coprocessor in 2013 as well, the M7 motion chip. Not only would it allow the existing sensors to persist, recording motion data even while the phone's main processor slept in a low-power state, but through persistence it would enable new features. Pedometer apps, for example, could start off with a week of historical data, and no longer had to rely on external hardware for background monitoring. Moreover, the system could realize when a person switched from driving to walking and record the location where they parked, making the car easier to find later, or realize when a person fell asleep and reduce network activity to preserve power. It could also pause or send alerts based not only on rigid times but on activity, for example, telling us to get up if we'd been stationary too long. It meant the phone not only knew where and how it was, but what was happening to it.
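To make the parked-car idea concrete, here is a minimal sketch in Python of how a log of activity samples might be scanned for the moment driving gives way to walking. It is an illustration only, not Apple's implementation: the `ActivitySample` type, the activity labels, and the `find_parked_location` function are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Location = Tuple[float, float]  # (latitude, longitude)


@dataclass
class ActivitySample:
    """One hypothetical reading from a motion log (not a real API type)."""
    timestamp: float   # seconds since an arbitrary start
    activity: str      # assumed labels: "driving", "walking", "stationary"
    location: Location


def find_parked_location(samples: List[ActivitySample]) -> Optional[Location]:
    """Return the last driving location seen before the most recent
    switch from driving to walking, or None if no such switch occurred."""
    in_car = False
    last_driving_location: Optional[Location] = None
    parked: Optional[Location] = None

    for sample in samples:
        if sample.activity == "driving":
            in_car = True
            last_driving_location = sample.location
        elif sample.activity == "walking" and in_car:
            # The person left the car: remember where it was last driven.
            parked = last_driving_location
            in_car = False
        # Any other activity (e.g. "stationary") leaves the state unchanged,
        # so a pause between driving and walking still counts as parking.

    return parked


log = [
    ActivitySample(0.0, "driving", (45.50, -73.57)),
    ActivitySample(60.0, "driving", (45.51, -73.56)),
    ActivitySample(90.0, "stationary", (45.51, -73.56)),
    ActivitySample(120.0, "walking", (45.512, -73.561)),
]
parked_at = find_parked_location(log)
```

Because the samples are scanned after the fact, the same approach would work on data recorded while the main processor slept, which is the point of a persistent motion log.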

Cameras like iSight have been slowly evolving as well. Originally they could simply see and record images and video. Eventually, however, they could focus on their own and automatically adjust for balance and white level. Then they could start making out faces. They could tell the humans from the backgrounds and make sure we got the focus. Later, thanks to Apple taking ownership of their own chipsets, image signal processors (ISPs) could not only better balance, expose, and focus images, but could also detect multiple faces, merge multiple images to provide higher dynamic range (HDR) and dynamic exposure, and eliminate instability and motion blur in both the capture and the scene. The software allowed the hardware to do far more than optics alone could account for. Moreover, the camera gained the ability to scan products and check us out at Apple Stores, and to overlay augmented reality to tell us about the world we were seeing.

Microsoft, for their part, is already on their second-generation visual sensor, the Kinect. They're using it not only to read a person's movement around them, but to identify people, and to try to read their emotional state and some amount of their biometrics. Google has experimented with facial recognition-based device unlock in the past, and Samsung with things like pausing video and scrolling list views based on eye tracking.

Apple has now bought PrimeSense, the company behind the Xbox 360's original Kinect sensor, though their plans for the technology have not yet been revealed. The idea of "always watching" is as controversial as "always listening", if not more so, and comes with the same type of privacy concerns. But what Siri did for the iPhone's "ears", these kinds of technology could do for its "eyes", giving them a level of understanding that enables even better photography, security, and more.

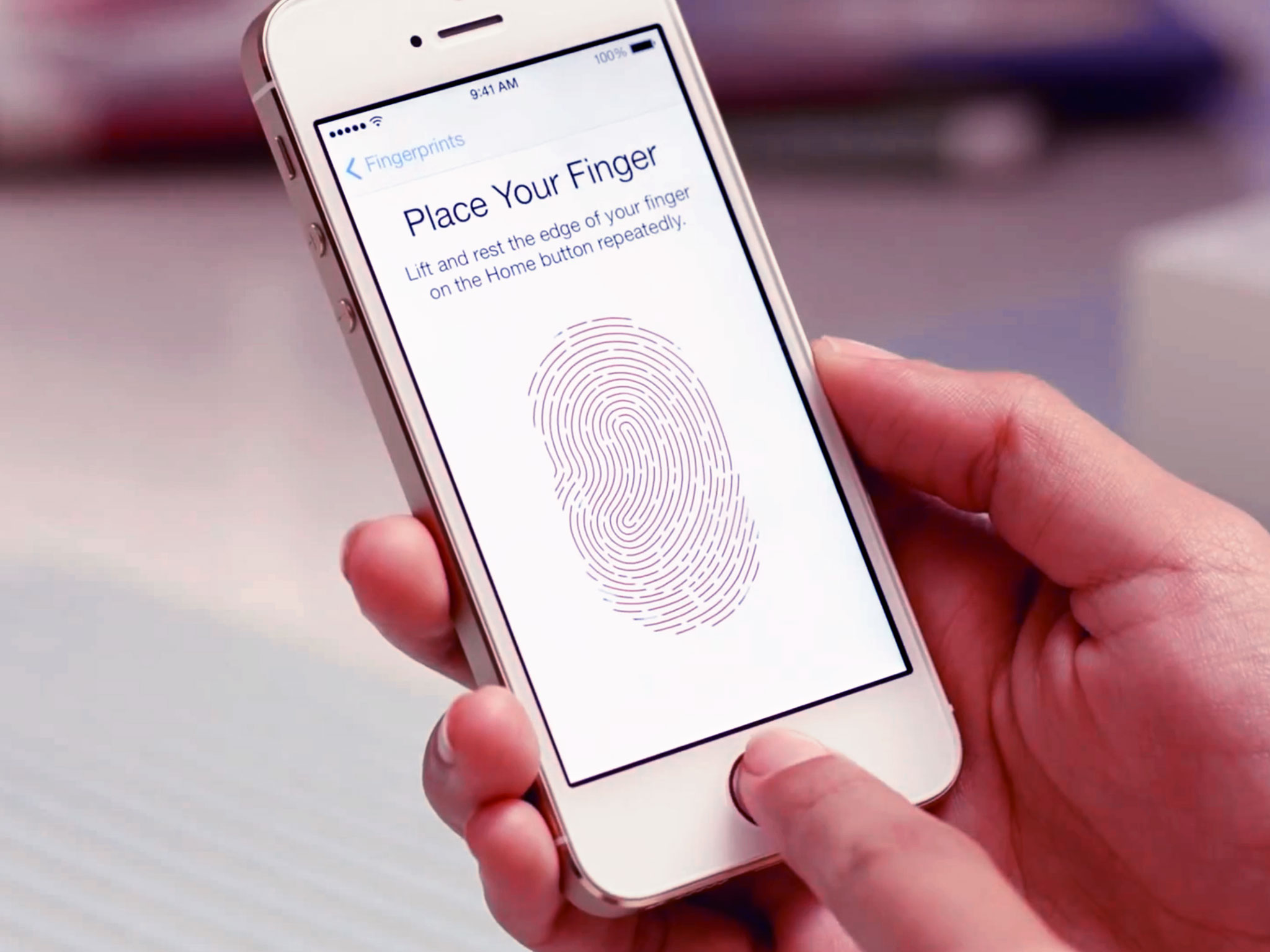

Touch ID, Apple's fingerprint identity system, is already doing that. It's taken the Home button from a dumb switch to a smart sensor. Instead of verifying people based on a Passcode they know, it identifies people based on who they are. Apple has hired other biometric sensor experts as well, though they haven't yet announced just what exactly they're working on. Yet the idea of devices even more personal than phones, wearable, capable of tracking not only fitness but health, is compelling. Pair them with the concept of Trusted Bluetooth (something you have) to verify identity, and one day what knows people on a biological level could be used to unlock the technological world around them.

That's the next great frontier. Phones now know and understand more than ever about their own place in the world, and their owners', but the world itself remains largely empty and unknowable. iBeacons, also introduced in 2013, could help change that. While satellites orbit the earth, cell towers dot the landscape, and Wi-Fi routers speckle homes, schools, and businesses, iBeacons are meant to fill in all the spaces in between, and to provide information beyond just location. Connected via Bluetooth 4.0 Low Energy, they could eventually guide navigation everywhere from inside stores, schools, and buildings, to vast wilderness expanses. iBeacons promise a network as rich as the world around it.

Thanks to the "internet of things", where every device with a radio can also be an iBeacon, including our phones and wearables, and can also sense and transmit its understanding and capabilities, everything could eventually tie together. Nest already makes connected thermostats and smoke detectors. Nexia already makes connected door locks and security systems. Almost all car companies offer connected automotive options. Eventually, everything that controls our environment will understand that environment and be able to hand over that understanding and control. Apple doesn't need to make most or any of this stuff, it just needs to be the most human, most delightful way of connecting it all together.

CarPlay is an example. The opposite of a wearable is a projectable. Apple did that early on with AirPlay and the Apple TV. They don't have to make a television, they can simply take over the screen. They don't have to make a car, they can simply take over the infotainment system. How many screens will one day be in our lives? Imagine iOS understanding most or all of them and presenting an Apple-class interface, suitable to the context, that's updated whenever iOS is updated and gets more powerful and capable whenever iOS devices get refreshed. It might take the development of dynamic affordance and push interface and other, more malleable concepts, but one day the phone in our pocket, the device we already know how to use and that already knows us, could simply exist on everything we need to interact with, consistent and compelling.

It won't be The Terminator or The Matrix. These won't be AI out to destroy us. This will be Star Trek or JARVIS from Iron Man. This will be devices that are capable only of helping us.

Traffic will take a turn for the worse. We'll glance at our wrist, noting we need to leave for our appointment a few minutes earlier. The heat at home will lower. Our car will start. It'll be brand new, but since our environment is in the cloud and our interface projects from the phone, we'll barely notice the seats moving and heating, and the display re-arranging as we get in. The podcast we were listening to in the living room will transfer to the car stereo even as the map appears on screen to show us the way. The garage door will open. We'll hit the road. The same delay that made us leave early means we'll be late for our next meeting. Our schedule will flow and change. Notifications will be sent out to whomever and whatever needs them.

We'll arrive at the building and the gate will detect us, know our appointment, and open. We'll be guided to the next available visitor's parking spot. We'll smile and be recognized and let in, and unobtrusively led to exactly the right office inside. We'll shake hands as the coffee press comes back up, our preference known to the app in our pocket and our beverage steaming and ready. We'll sit down, the phone in our pocket knowing it's us, telling the tablet in front of us to unlock, allowing it to access our preferences from the cloud, to recreate our working environment.

Meeting done, we'll chit chat and exchange video recommendations, our homes downloading them even as we express our interest. We'll say goodbye even as our car in the parking lot turns on and begins warming up, podcast ready to resume just as soon as we're guided back to it, and into listening range. On the way down, we'll glance at our wrist again, and note we need to eat something sweet to keep our energy in balance. A vending machine on the way will be pointed out, and the phone in our pocket will authorize a virtual transaction. A power bar will be extended out towards us. We'll grab it, hurry down, and be on our way to work even as our 4K displays in the office power up, the nightly builds begin to populate the screen, and the tea machine starts brewing a cup, just-in-time, ready and waiting for us...

Right now our phones, tablets, and other mobile devices are still struggling as they awaken and drag themselves towards awareness. It's a slow process, partially because we're pushing the limits of technology, but also because we're pushing the limits of comfort. Security will be important, privacy will be important, humanity will be important. If Apple has had one single, relentless purpose over the years, however, it's been to make technology ever more personal, ever more accessible, and ever more human. Keyboards and mice and multitouch displays are the most visible examples of that purpose. Yet all of those require us to do: to go to the machine and push it around. Parallel to input methods there's been a second, quieter revolution going on, one that feels and hears and sees and ultimately not only senses the world and network around it, but is sensed by it. It's a future that will not only follow us, but, in its own way, understand.

Update: CarPlay, just announced, has been added to this article.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.