Person-centric computing: The future beyond iOS and OS X

iMore offers spot-on advice and guidance from our team of experts, with decades of Apple device experience to lean on. Learn more with iMore!

This is the dream: You pick up a phone or a tablet, you sit down to a laptop or desktop, you walk up to a display of any kind, and all of your stuff is just there, ready and waiting for you to use or enjoy. It's the future of decoupled computing where intelligence is independent of environment, and where the device-centric world gives way to the person-centric experience. It's a future where iOS or OS X, cloud or client are abstract terms no mainstream human ever has to deal with or worry about. It's getting a lot of attention lately thanks to comments on convergence from Apple executives in an interview with Jason Snell on Macworld and the catchy "iAnywhere" label of a couple of analysts. What's more interesting to me is not so much the idea — computing as we know it will obviously continue to evolve — but the implementation. How could Apple make the person-centric experience a reality?

Here's what the analysts said about iAnywhere:

This "next big thing" for Apple would essentially be a "converged platform" featuring the company's Mac OS and iOS operating systems in which an iPhone or an iPad would be able to "dock into a specially configured display to run as a computer."

It's far from an original idea, of course. Apple itself filed patents for roughly similar technology, a portable computer that docks to become a desktop, going back at least as far as 2008:

A docking station is disclosed. The docking station includes a display and a housing configured to hold the display in a manner that exposes a viewing surface of the display to view. The housing defines a docking area configured to receive a portable computer; The docking area is at least partly obscured by the display when viewed from the viewing surface side of the display at an angle substantially orthogonal to the viewing surface.

OS X merging with iOS has likewise been a popular discussion topic since OS X Lion brought iPad ideas "back to the Mac". It made sense at the most superficial design and naming level for comfort and consistency, but never at the deeper levels, and certainly not for anything approaching convergence. It's the "toaster-fridge" Tim Cook made fun of. Or, as Apple's SVP of software, Craig Federighi, said to Snell:

"The reason OS X has a different interface than iOS isn't because one came after the other or because this one's old and this one's new," Federighi said. Instead, it's because using a mouse and keyboard just isn't the same as tapping with your finger. "This device," Federighi said, pointing at a MacBook Air screen, "has been honed over 30 years to be optimal" for keyboards and mice. Schiller and Federighi both made clear that Apple believes that competitors who try to attach a touchscreen to a PC or a clamshell keyboard onto a tablet are barking up the wrong tree. "It's obvious and easy enough to slap a touchscreen on a piece of hardware, but is that a good experience?" Federighi said. "We believe, no." [...] "To say [OS X and iOS] should be the same, independent of their purpose? Let's just converge, for the sake of convergence? [It's] absolutely a nongoal."

The important thing here is to remember that the Mac didn't always run OS X, and in another 30 years (hell, another 10 or even less) it might not run OS X anymore either, nor might iOS as it currently exists be found on any hardware. That's why what's really being said by Federighi is that the traditional WIMP (windows, icons, menus, pointer) interface shouldn't be jumbled together with multitouch. They're different paradigms for different hardware.

What's not being said is whether one will eventually supersede the other, the way punch-cards and, to a large extent, command lines, have been superseded by the GUI and multitouch, or whether they'll all be replaced by something else entirely, like natural language or the cloud. Examples of all of them have either already been tried, are in progress, or have been talked about for years.

Layering interfaces together

Microsoft tried to layer multitouch and desktop interfaces into Windows 8. It was pitched as "no compromises" but has generally been rejected by the market as the ultimate in compromise. Instead of the best of both, it ended up the worst of both. It may not have been obvious from the start. Many people still ask for touchscreen Macs or iOS X on large-size tablets, for example. Hell, I used to think I wanted an iOS layer on top of OS X, the way Front Row or Dashboard works, or even Launchpad. Not any more, and it's likely no coincidence that Front Row is now gone, Dashboard is basically abandonware, and only Launchpad remains. That's because it was obvious to Apple, and hopefully to everyone in hindsight: as much as the medium is the message, the interface is the experience. Bifurcate one and you bifurcate them both.

Although multitouch and desktop haven't layered well together, natural language is currently working in exactly that way. Siri sits "on top" of iOS and Google Now sits "next to" Android. Both are only a press or a swipe or an utterance away. That makes a lot more sense. Neither can replace the multitouch interface right now, but both can enhance the experience.

Taking it to the cloud

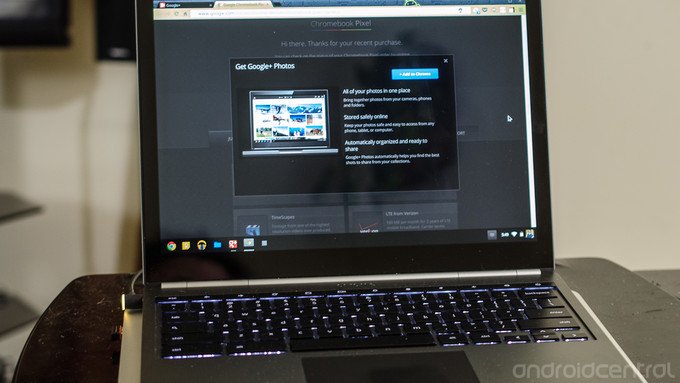

Google's ChromeOS is the best real-world implementation of the thin-client model we've seen so far. All your computing is in the cloud. You log into a device, conceivably any device, and it becomes yours. Google's only doing it on laptops and desktops for now, but it's not hard to imagine tablets and even phones and wearables can and will follow. I don't think the Google Now card interface was chosen accidentally or haphazardly, nor do I think Google Services have been abstracted from Android's core solely to shape the current market.

Yet the pure cloud approach has many limitations as well. Connectivity remains an issue, as do the limitations on software power and performance. Concerns about privacy and security remain as well. For many people, for many activities, however, convenience could well win out.

Apple's iCloud, by contrast, stores and syncs account information between both iOS and OS X, but only backs up iOS settings and data, and needs apps and media to be re-downloaded to local devices. It's a cloud solution from a device company, and while it can and will improve, the idea of everything in the cloud may not fit Apple's vision for computing.

Making the brain mobile

Last April, former BlackBerry CEO Thorsten Heins caused some controversy by saying tablets weren't a long-term business. He was ridiculed because, well, iPad. His vision, however, was the internet of things, where a phone could be the brain that powers multiple different computing end points, including tablets and traditional computing displays (with mouse and keyboard). Here's what I wrote about the idea back then:

The futurist in me wants to take that a step further, to where the computing is decoupled from device, and the "brains" are a constant thing we always have with us, hooked in everywhere, capable of being expressed as a phone or tablet or laptop or desktop or holodeck for that matter. All my stuff, existing everywhere, accessible everywhere, through any hardware interface available.

It's not dissimilar to the vision of computing shown off over a decade ago by Bill Gates during one of his impossibly forward-thinking CES keynotes. Unfortunately, just like with tablets, Microsoft couldn't get past their Windows-everywhere, PC-centric point of view, and so a decade later they're no further ahead than anyone else when it comes to actually delivering it.

What's interesting about the device-as-the-brain, however, at least compared to cloud-as-the-brain, is that it's not entirely reliant on someone else's server. Because it doesn't have to depend on the internet, it can sit in your pocket and make its own ad-hoc, direct networking connections as well.

Palm's Foleo laptop companion died on the vine, and the original purpose of the BlackBerry PlayBook was never allowed to be. Both of those things were for good reason. They simply weren't the right implementations and they absolutely weren't at the right times.

The sum of the parts

What's also possible, perhaps even more likely, is some combination of all of the above: a device with multitouch, natural language, and sensor-driven interface layers that connects to the cloud for information and backup, but also serves as the central point for identity and authentication.

I've mentioned this kind of person-centric future briefly in a previous article, The contextual awakening: How sensors are making mobile truly brilliant:

We'll sit down, the phone in our pocket knowing it's us, telling the tablet in front of us to unlock, allowing it to access our preferences from the cloud, to recreate our working environment.

That, I think, is key to this working out for me. Rather than docking, projecting device trust and even interface is more forward-thinking. Understanding the context of not only us, but the screens around us, simply makes the most sense. Rather than old-style UNIX user accounts on iPads, for example, Touch ID, iCloud, and device trust could do the same thing. Projecting from phone to wearable or phone to tablet or computer could do even more.

Some of the technology for this already looks to be underway. From last year on the concept of "iOS everywhere" branching off from iOS in the Car:

Traditionally Apple hasn't done as well when they have to depend on other companies, but the potential of iOS in the Car seems to go further than just the car. Indeed, it could provide our first hints of iOS everywhere, and that's incredibly exciting for 2014, and beyond. [...] And, of course, seeing Apple project iOS interface beyond just TV sets and Cars, but onto all manner of devices would be fantastic as well. Apple doesn't make the range of products a Samsung or LG make, nor do they have any interest in licensing their operating systems the way Microsoft, BlackBerry, and Google do. However, taking over screens neatly sidesteps both those issues, and keeps Apple in control of the experience, which they're fond of. So we'll see.

Imagine it: You walk into your house with your iPhone, your lights come on and so does your TV and home theater, exactly where you left off. Your iPad and/or your iMac also turn on, your current activity locked and loaded. And all of it, not because of a fiddly dock or inconvenient login, but because they know you, they know your stuff, and they know what you want to do with them next.

Beyond iOS or OS X, beyond "iAnywhere", that's the dream many of us have been waiting for.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.