Apple explains why your iPhone 15 Pro's 24-megapixel photos are better than 48-megapixel shots

Just leave the settings alone.

iMore offers spot-on advice and guidance from our team of experts, with decades of Apple device experience to lean on. Learn more with iMore!


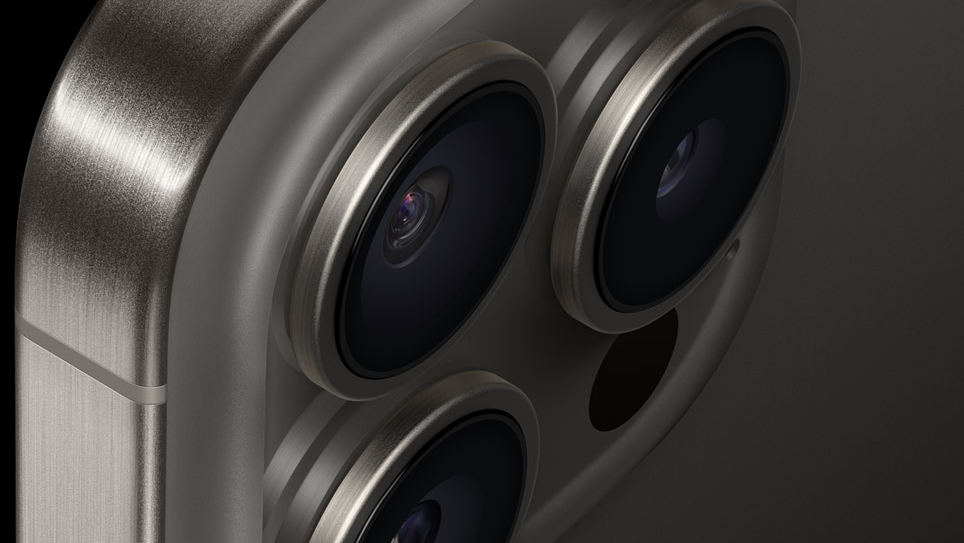

When the iPhone 15 Pro and iPhone 15 Pro Max go on sale on September 22, they'll come with 48-megapixel cameras that take 24-megapixel photos by default. Apple explained why during its September 12 unveiling, and now it has tried to clear things up further.

As is always the case with new iPhones, photography is a big focus this time around. That's partly thanks to the 5x zoom camera on the Pro Max, but the main 48-megapixel camera gets upgrades, too. And that's where Apple has been facing a question: why take 24-megapixel photos with a 48-megapixel camera?

The reason, Apple says, is that it can take better photos at that resolution while still making full use of all the pixels on that 48-megapixel sensor.

Cameras in focus

It's fair to say that people who buy the best iPhones might be tempted to switch away from 24-megapixel shots to save 48-megapixel files. But in a new interview with camera website PetaPixel, Jon McCormack, Vice President of Camera Software Engineering at Apple, says that would be a mistake.

“You get a little bit more dynamic range in the 24-megapixel photos,” McCormack explained in the interview. “Because when shooting at 24-megapixels, we shoot 12 high and 12 low — we actually shoot multiple of those — and we pick and then merge. There is, basically, a bigger bracket between the 12 high and the 12 low. Then, the 48 is an ‘extended dynamic range,’ versus ‘high dynamic range,’ which basically just limits the amount of processing. Because just in the little bit of processing time available [in the 24 megapixel] we can get a bit more dynamic range into Deep Fusion. So what you end up with in the 24, it’s a bit of a ‘Goldilocks moment’ of you get all of the extra dynamic range that comes from the 12 and the detail transfer that comes in from the 48.”

Put more simply, Apple's camera processing merges several 12-megapixel frames captured at different exposures, which gives it more dynamic range, and combines them with detail taken from the 48-megapixel sensor. The result is a better image than if the iPhone simply saved a full-resolution shot instead.

McCormack also notes that photographers get zero shutter lag when shooting at 24 megapixels, something that wouldn't be possible with 48-megapixel captures.


Oliver Haslam has written about Apple and the wider technology business for more than a decade with bylines on How-To Geek, PC Mag, iDownloadBlog, and many more. He has also been published in print for Macworld, including cover stories. At iMore, Oliver is involved in daily news coverage and, not being short of opinions, has been known to 'explain' those thoughts in more detail, too.

Having grown up using PCs and spending far too much money on graphics cards and flashy RAM, Oliver switched to the Mac with a G5 iMac and hasn't looked back. Since then he's seen the growth of the smartphone world, backed by iPhone, and new product categories come and go. Current expertise includes iOS, macOS, streaming services, and pretty much anything that has a battery or plugs into a wall. Oliver also covers mobile gaming for iMore, with Apple Arcade a particular focus. He's been gaming since the Atari 2600 days and still struggles to comprehend the fact that he can play console-quality titles on his pocket computer.