Will Apple Vision Pro change the way we work with projects in 3D?

Opinion: Vision Pro and 3D — a match made in spatial heaven?

Apple Vision Pro is finally here. Apple’s shiny new spatial computing wearable, which aims to change the way we live and work, is out in the wild, strapped to heads crossing busy streets (or behind the wheel) and leaving us talking to ourselves on our sofas. Will Apple Vision Pro change the way we live? Maybe. What about the way we work? Well, for this 3D printing nerd, that’s certainly an interesting possibility.

Whenever a potentially disruptive product comes to market, there’s the looming question of ‘who is it for?’ With a retail price of $3,499, the Vision Pro isn’t exactly accessible to the average consumer, but Apple has so far done a good job of marketing the device to professional users, with demos showing fingers pointing at floating apps in virtual workspaces. Chief among the professional features showcased is the ability to bring 3D objects to life.

Apple says Vision Pro lets wearers “pull a 3D object out of an app and look at it from every angle as if it’s right in front of you.” You can take a 3D file in the USDA, USDC, or USDZ format, whether it was created in 3D software or captured with a 3D scan, straight from Messages and view it as an AR object. That opens up possibilities not only to digitize content but to bridge the gap between 3D design and physical products.

Can you prototype with Apple Vision Pro?

Apple Vision Pro presents some compelling 3D scanning and digital twin capabilities. The Vision Pro’s 12 cameras (six of them external) are designed to track hand gestures and capture spatial 3D photos and videos, a capability that has also been added to the iPhone 15 Pro. That hardware could be advantageous to design and manufacturing processes, particularly 3D printing workflows.

I asked Kadine James, Chief Metaverse Officer at Artificial Rome (a Berlin-based studio focusing on immersive experiences) and former 3D tech lead at London print bureau Hobs 3D, for her thoughts on where the two might meet. “Creatives can utilize the precise spatial mapping capabilities of the Apple Vision Pro to capture detailed 3D scans of physical objects,” James explains. “These scans can then serve as the foundation for creating intricate and accurate 3D models. Once the digital models are refined, they can seamlessly integrate with 3D printing workflows. This synergy allows for the production of highly customized, complex, and finely detailed physical prototypes.”

James suggests that the real-time spatial awareness provided by Vision Pro could enhance the accuracy of the 3D printing process, helping ensure that the physical output faithfully represents the digital design.

Prototyping is still the biggest application of 3D printing technologies today (there’s no sign that 3D printing was used to produce the Vision Pro itself, but you can almost guarantee it was used to prototype it). Future apps built for visionOS might even let you prototype before you prototype, with what James describes as “augmented reality-enhanced prototypes, where digital information is overlaid onto the physical models during the design and testing phases.”

Designers and manufacturers could, theoretically, tweak designs and place them virtually in their end-use environments, saving the time and materials that would otherwise go into producing a physical product.

Using Vision Pro in the real world

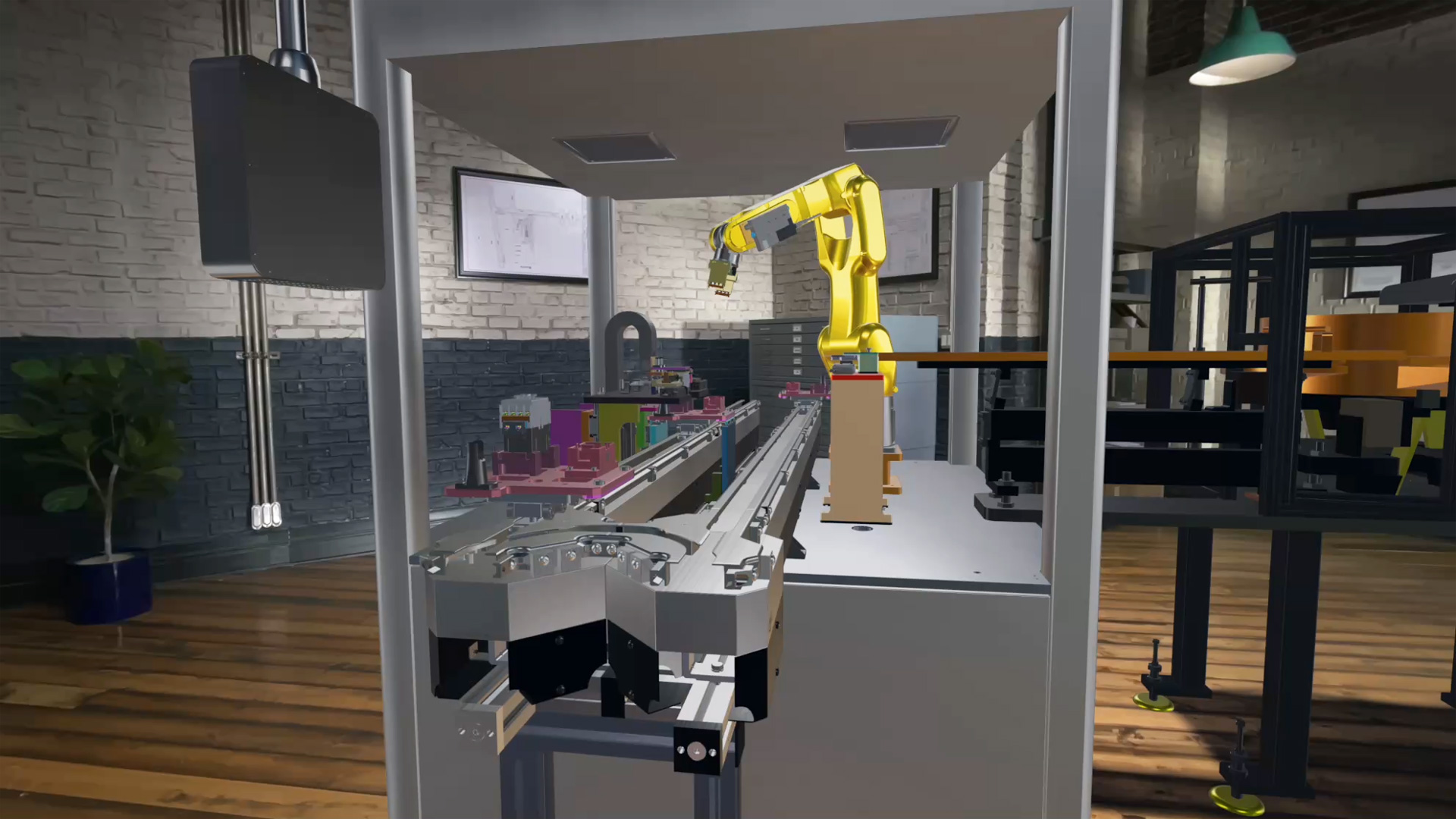

Current Vision Pro demos show users taking virtual house tours or even turning their living spaces into mountaintops, but imagine, as a designer, being able to step inside a jet engine or a production line. One of the biggest challenges in 3D printing today is identifying valuable end-use applications. If users could see inside an exploded 3D view of a machine or assembly, then handle and experiment with its parts in a virtual environment, more applications might be unlocked.

In June 2023, Apple shared how its visionOS software development kit is enabling developers to create new spatial experiences. Software company PTC, for example, has created a space that allows engineers to interact with production lines to make design and operation decisions. If more of these spaces are created, spatial computing and 3D technologies stand to complement each other nicely. Apple has also highlighted Elsevier Health’s Complete HeartX app, which uses hyper-realistic 3D models to prepare medical students for clinical practice. Paired with tangible 3D objects, apps like this could be a valuable bridge that better prepares healthcare professionals for real-life surgeries.

Much like 3D printing, VR/AR tools have ridden similarly steep hype curves (RIP will.i.am’s Coca-Cola desktop 3D printer). For any technology in its infancy, the challenge is often a lack of applications. At launch, more than 600 native apps were available for the Vision Pro. More will of course come, but the headset’s currently small user base could dampen developers’ enthusiasm. That should change as more users get their hands on headsets, but for now, the professional market, particularly 3D manufacturing, could be a good place for developers to focus.