iPhone 15 Pro Max zoom camera is a big win for accessibility — here are three ways how

A big win for accessibility, thanks to 5x zoom.

iMore offers spot-on advice and guidance from our team of experts, with decades of Apple device experience to lean on. Learn more with iMore!

Apple launched its iPhone 15 series on September 22, and one of the big highlights was a new lens in the 15 Pro Max.

I’ve owned one since launch day, and it’s fantastic. Great battery, a stunning display and a fast A17 Pro chip have proved impressive, and the Action Button has been really useful for quickly launching some Shortcuts. But it's the camera that’s the biggest improvement here. With its 5x zoom lens, I’ve been using it a bunch for taking photos of distant wildlife when walking my dog.

But Apple’s recent Accessibility improvements have given me cause to think about how people with hearing and visual impairments use these devices. Apple has made strides to make sure accessibility is not an afterthought in its products, and so it should. Accessibility features let many users use their iPhones in the same way that anyone else can, so they feel empowered rather than left out.

If you browse through TikTok and Instagram, you’ll most likely stumble into a ‘Here’s this hidden hack you’ve always had on your iPhone’ video. I cringe at these because the features aren’t hidden hacks; they’re accessibility features. Whether it’s Live Listen on AirPods or AssistiveTouch, these are meant to help those who need to use their device in certain ways.

After trying out these improvements to some Accessibility features on my iPhone 15 Pro Max, here are three big features that will take advantage of the new cameras.

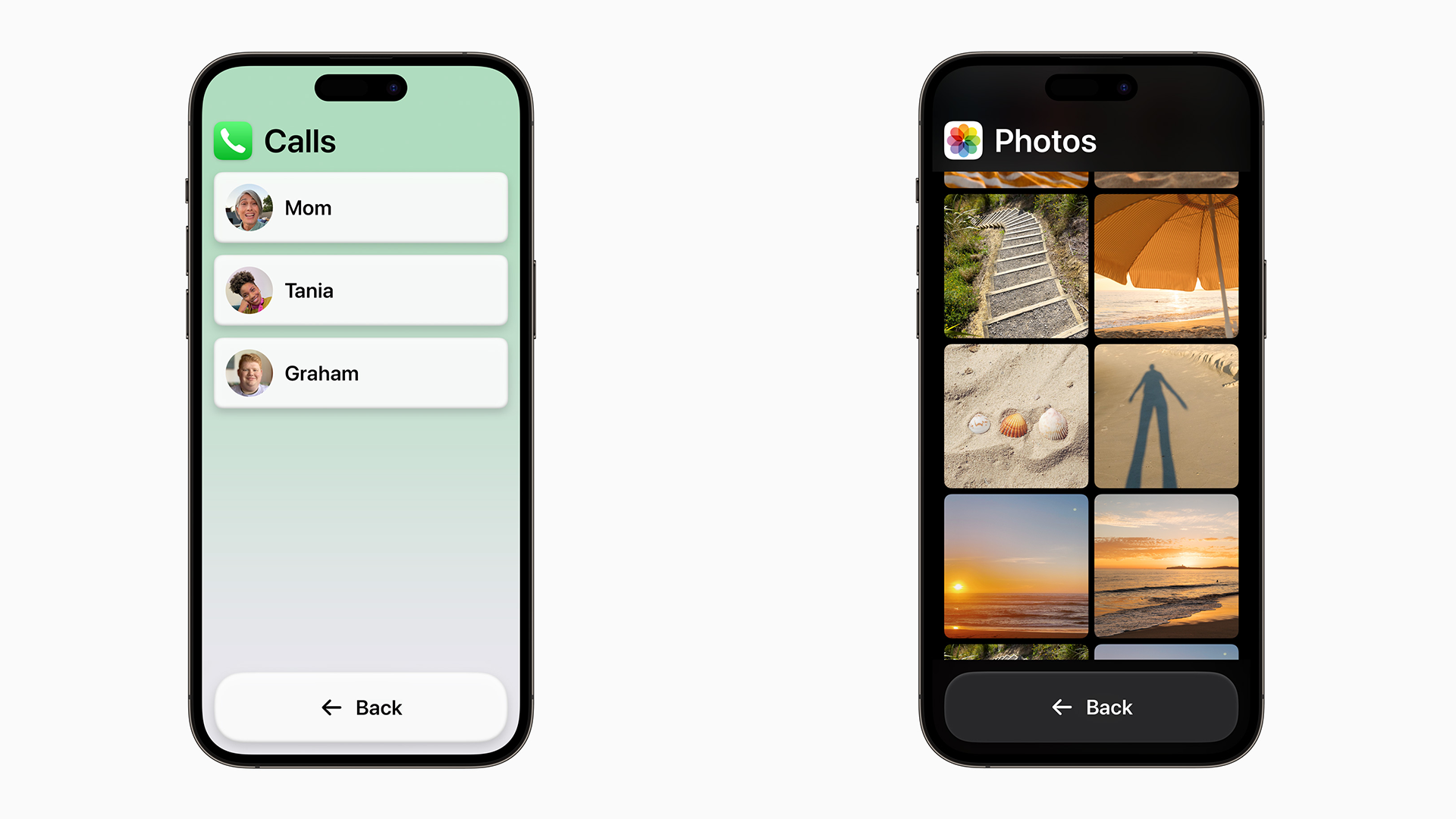

Assistive Access

This is the biggest feature to come out of the Accessibility improvements in iOS 17. It transforms your iPhone’s home screen to show big, easy-to-use icons, which can be a real help for someone with visual needs.

Apple has also updated some of its apps to work with Assistive Access, and this includes the Camera. When you launch it, you’re brought to a list of modes you can select: Photo, Video, Selfie, and Video Selfie. After you pick one, a simpler interface appears with just one button to carry out the chosen action.

Granted, this is available on every iPhone that can run iOS 17. But if you’re visually impaired and you’ve been wanting to upgrade to the newest and best iPhone, this is where you’re going to reap the benefits of this new feature.

It’s very easy to use, and with the new cameras in the iPhone 15 Pro Max, the app can take advantage of the improved lenses that can help make your photos and videos shine.

Detection Mode in Magnifier

The Magnifier app first appeared in iOS 11, letting you zoom in on different objects using your iPhone's camera. But it’s only in recent years that it’s seen some new features.

For those unaware, you can add it to Control Center by going to Settings > Control Center and adding it to the list, then launch it from there. Magnifier looks similar to the Camera app, but it adds toggles for things like switching on the LED light next to the back camera. You can also select a camera filter, or use the slider to zoom in on subjects.

Detection Mode, which first appeared in iOS 15, can be found in Magnifier and only works with iPhone Pro models that have the LiDAR sensor. Once enabled, there are three features you can use. The first is Door Detection: point your iPhone at a door and it will tell you how far away it is, so you’re ready to reach out and open it. There’s also People Detection, which works the same way, but for people. Finally, Image Descriptions can describe objects in front of the camera, wherever you point your iPhone.

All of these could benefit from the new camera in the iPhone 15 Pro Max. The 5x zoom and the ability to select different lenses could help you detect doors and objects from a greater distance. This could work well if you don’t have perfect eyesight. If you’re rushing through a busy area, for instance, it could help you make sure that you’re close to the door of an office or a shopping mall.

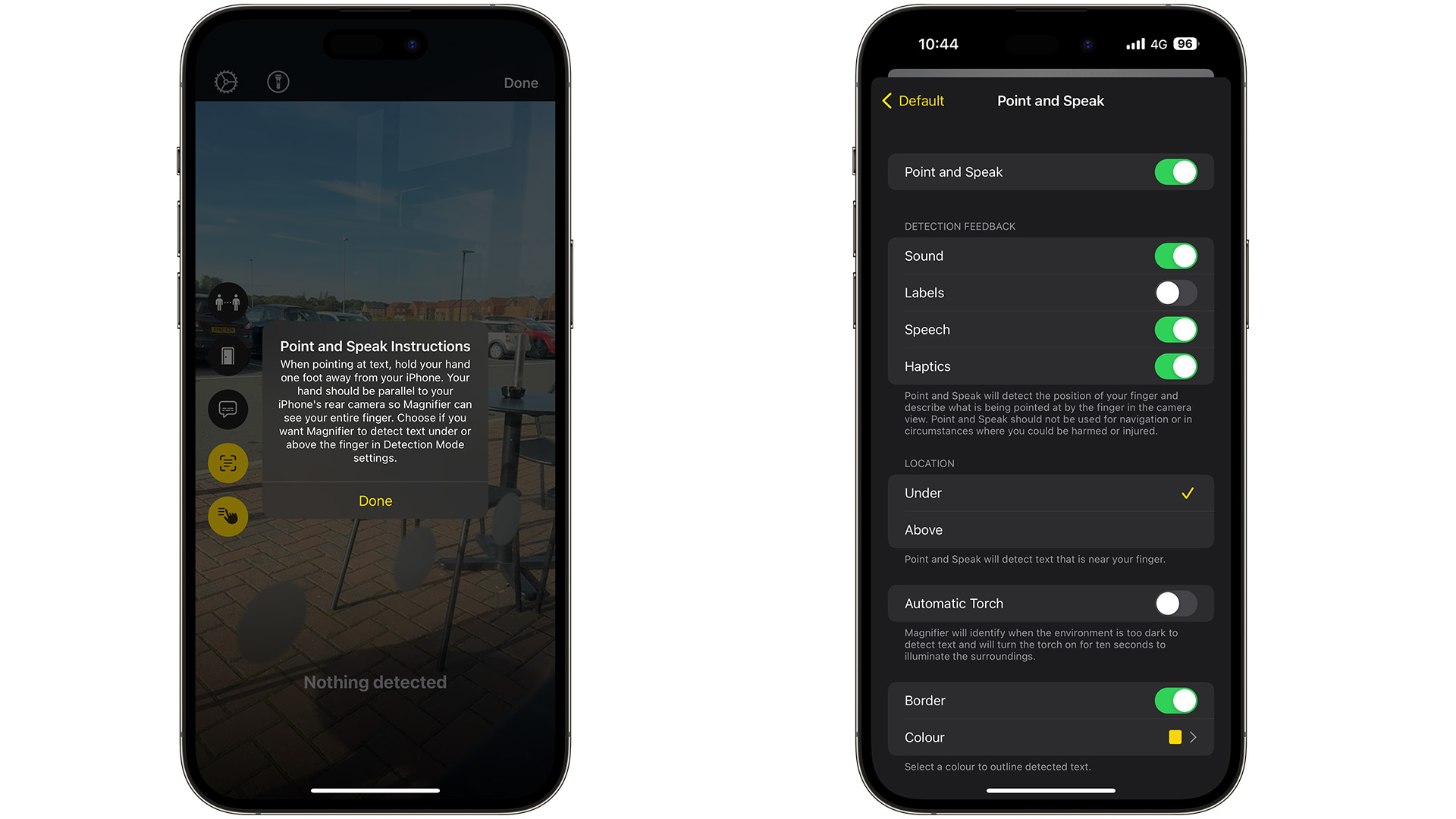

Point and Speak

Point and Speak is a new feature in iOS 17 that appears in Detection Mode within the Magnifier app, and can be found below the Image Descriptions toggle. This can describe almost any object in front of you, so you know where you are at that moment.

Point your iPhone at a keypad for example, and with your other hand, point at certain numbers. Point and Speak will tell you what you’re pointing at, giving you a better idea of what numbers you’re putting in.

While this is going to be very helpful for many, it’s only going to be supercharged with the iPhone 15 Pro Max. That’s not just thanks to the improved camera: the bigger screen and better battery life will also help those who have planned a day out somewhere. They may be using the Magnifier app for most of the day, which will drain the battery of devices with shorter battery life.

The iPhone 15 Pro Max, however, has the largest battery capacity of all iPhones currently available. I’ve been able to get a day and a half of use out of mine, and that includes using the Camera app for the majority of that time.

A camera isn’t just for taking photos of your food

Apple puts accessibility at the forefront of the products that it makes. The proof is in these features, alongside Personal Voice, virtual game controller support, Live Speech, and much more.

Magnifier has quickly become one of the most important apps on the iPhone for a certain group of users. For those with visual impairments, it can help them find the door to a library, or recognize different objects when cooking, for instance.

With the iPhone 15 Pro Max, all of these features are only supercharged. From the A17 Pro chip to the improved camera and display, it’s the ultimate accessibility device.

Daryl is iMore's Features Editor, overseeing long-form and in-depth articles and op-eds. Daryl loves using his experience as both a journalist and Apple fan to tell stories about Apple's products and its community, from the apps we use every day to the products that have been long forgotten in the Cupertino archives.

Previously Software & Downloads Writer at TechRadar, and Deputy Editor at StealthOptional, he's also written a book, 'The Making of Tomb Raider', which tells the story of the beginnings of Lara Croft and the series' early development. His second book, '50 Years of Boss Fights', came out in June 2024, and he has a monthly newsletter called 'Springboard'. He's also written for many other publications including WIRED, MacFormat, Bloody Disgusting, VGC, GamesRadar, Nintendo Life, VRV Blog, The Loop Magazine, SUPER JUMP, Gizmodo, Film Stories, TopTenReviews, Miketendo64, and Daily Star.