Accessories & Toys

From speakers and headphones to keyboards, stands and docks to the vast world of iPhone cases and Mac slip covers, there are tech accessories galore. iMore's industry-leading experts scour the web, test the best products, and bring you reliable, trustworthy advice about all the accessories you need for your tech lifestyle.

Latest about Accessories

This new 4K projector is tempting me to replace my LG C2 TV

By Daryl Baxter

Nebula's newest projector has already replaced my 4K television — but it comes with a price.

Anker launches its new MagGo range — and its 3-in-1 charger could be my new favorite

By Daryl Baxter

Anker has announced its updated MagGo range for 2024, which includes new power banks, stands, and more.

These are the 7 accessories I'm buying for my iPhone 16 Pro upgrade

By Daryl Baxter

With my iPhone 16 Pro arriving on September 20, I'm already planning to buy these accessories for it.

These 4 accessories have supercharged my Mac now that macOS Sequoia is here

By Daryl Baxter

With macOS Sequoia now available, I've bought these three accessories to take my multitasking to the next level.

No, really: FineWoven isn't dead as Apple reveals new iPhone 16 accessories made from the controversial material

By Lloyd Coombes

Apple's FineWoven material hardly flew off shelves last time, but the company is trying again.

On eve of iPhone 16 launch, UGREEN announces new line of charging devices

By Daryl Baxter

Prolific accessory maker UGREEN announces a bunch of charging accessories to help keep your iPhone juiced up.

Beats Studio3 ANC wireless headphones are 60% off in this UK deal on Apple headphones

By Connor Jewiss

Beats' Studio3 ANC wireless headphones are 60% off in this UK deal on Apple headphones. They might be a little older now, but they're a top set of cans.

SanDisk announces huge 8TB SD card for all your photo-editing needs

By Daryl Baxter

SanDisk has announced a world-first 8TB SD card that can store all your projects in one place for safekeeping.

Logitech won't charge you a subscription fee for your mouse after all, but was it such a terrible idea?

By Oliver Haslam

Logitech says that talk of a "forever mouse" that would require a subscription to use was just a thought, and it isn't going to happen. But would it really be that bad an idea?

Anker wants to be your iPhone's desk and vacation buddy with updated charging stations, USB-C wall chargers, and cables

By Daryl Baxter

Anker has announced its updated Prime range for 2024, which includes new charging stations, wall chargers, and more.

The Anker MagGo 3-in-1 Wireless Charging Station has become an essential part of my office setup

By James Bentley

Anker's MagGo 3-in-1 Wireless Charging Station has sat on my desk for a few weeks now and has become a near-essential part of my setup.

Best Wireless HDMI Video Transmitter 2025

By Tammy Rogers

Want to play your game console or stream shows from a set-top box on another TV in your home without relying on Wi-Fi? Then you need one of the best wireless HDMI video transmitters.

Best Headphone Jack Adapter 2025

By Tammy Rogers

Looking for a headphone jack adapter? We've selected the best on the market to help you decide which one to buy for your iPhone or iPad.

Police officer charged with stalking after an AirTag linked to him was found in a victim's car

By Oliver Haslam

A Lancaster County constable has been charged with stalking after an AirTag linked to his Apple ID was found in a man's car.

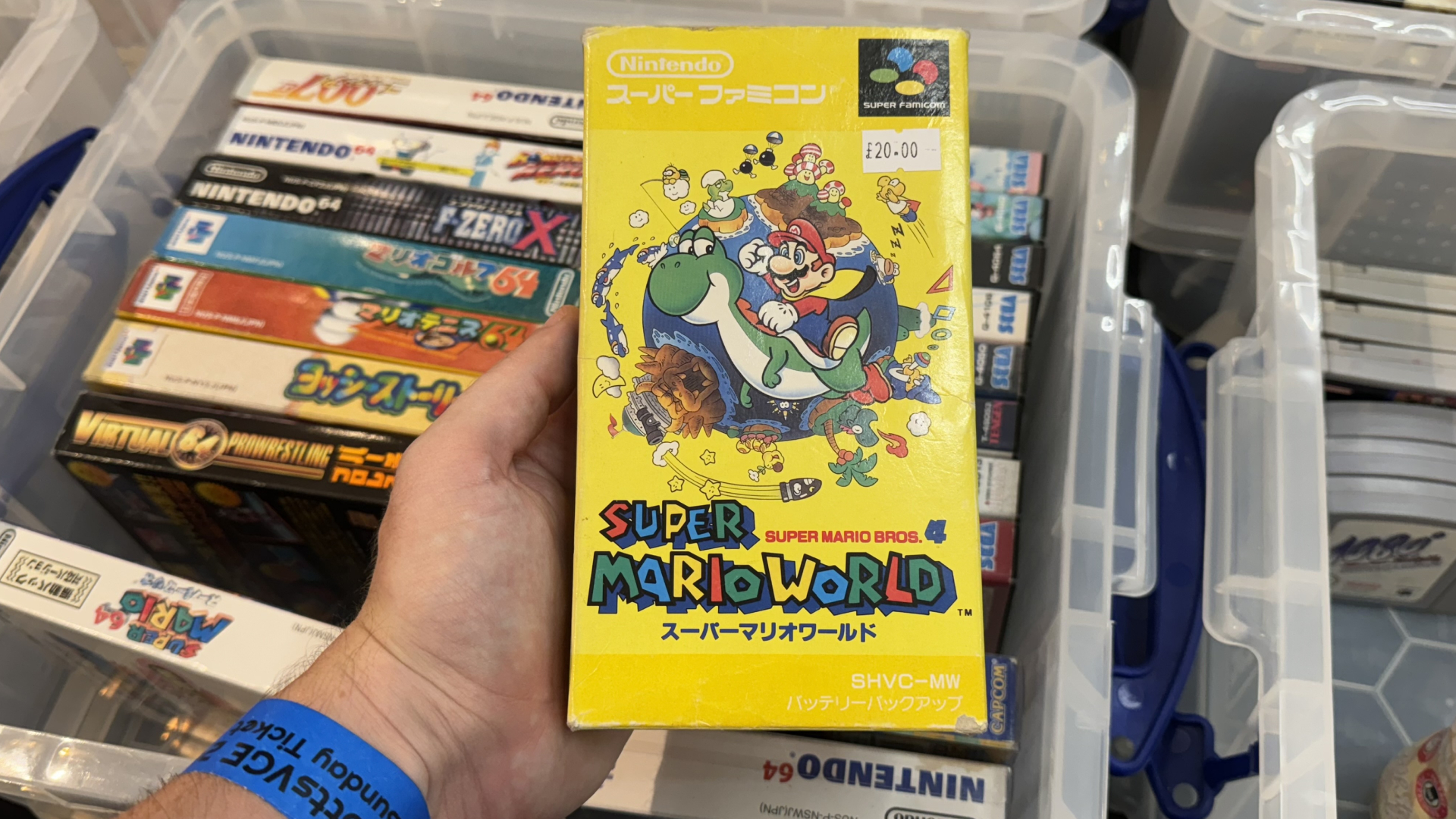

These 3 accessories kept my iPhone, Mac, and Nintendo Switch charged all day when I visited a gaming expo

By Daryl Baxter

During a day-long trip to an event, these three accessories kept my iPhone, Mac, and Nintendo Switch fully charged!

I'm a veteran podcast host, and here's the only microphone I'd buy this Amazon Prime Day

By Daryl Baxter

I've been podcasting for over five years now, and this microphone deal is tempting me to switch from my trusted Yeti mic.

High score! The best iPhone controller I've ever reviewed is now ridiculously cheap on Amazon Prime Day

By Stephen Warwick

This Backbone One iPhone controller is the perfect gaming companion, and now it's just $69.99 in the Amazon Prime Day sale.

This portable monitor Prime Day deal is so ridiculous that you may as well buy two of them

By Daryl Baxter

Portable monitors can give you that two-screen workflow wherever you are, even on a plane.

A vertical mouse saved my wrist from years of pain — if you only buy one product this Prime Day, make it one of these

By John-Anthony Disotto

A vertical mouse changed my life. So, if you've got wrist pain from working on your computer, this should be the only thing you buy for Prime Day.

iMore offers spot-on advice and guidance from our team of experts, with decades of Apple device experience to lean on. Learn more with iMore!